https://www.youtube.com/watch?v=Jt5BS71uVfI

================================================================================

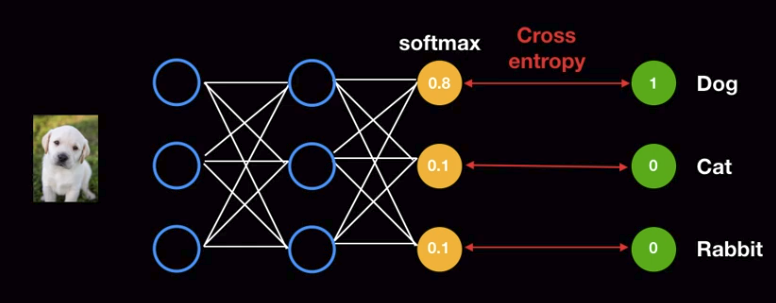

Cross Entropy:

- an entropy value

- calculated from information that may be incorrect

- measures the amount of uncertain information

================================================================================

Example of "information that may be incorrect":

- a prediction from an ML model

================================================================================

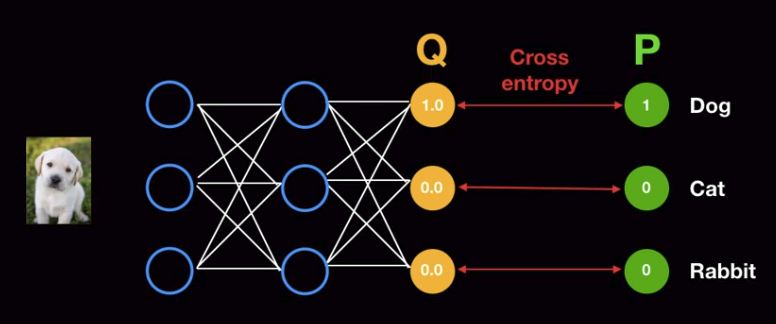

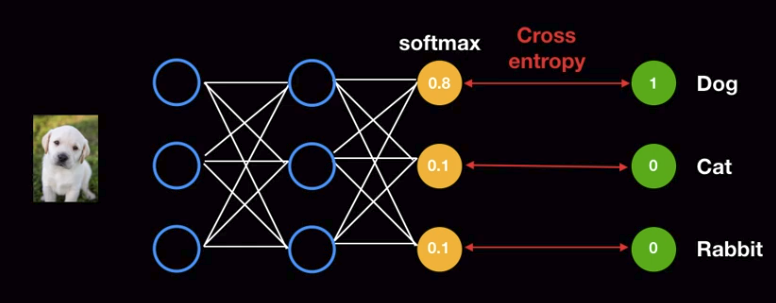

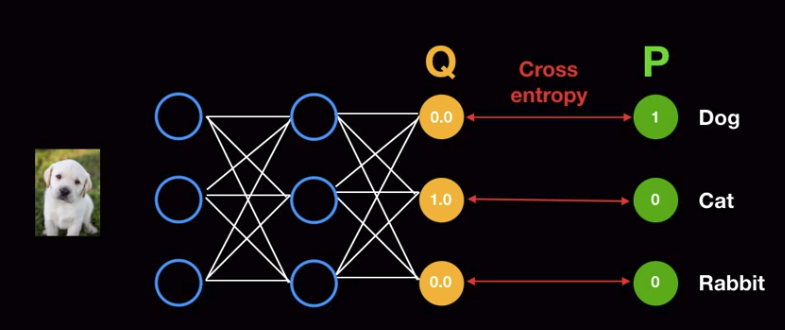

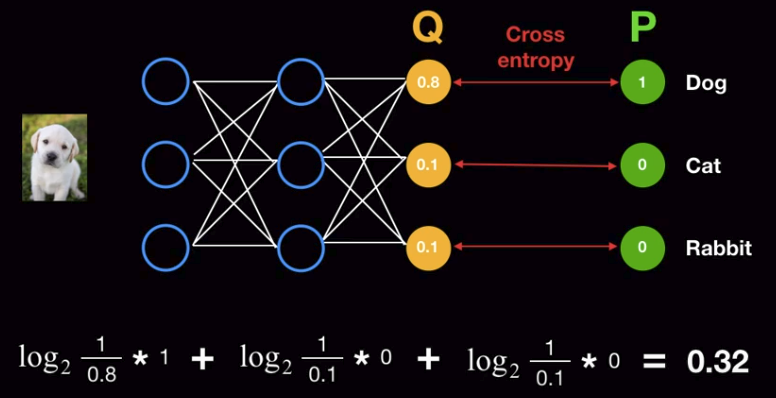

Q: estimated probability

P: true probability

================================================================================

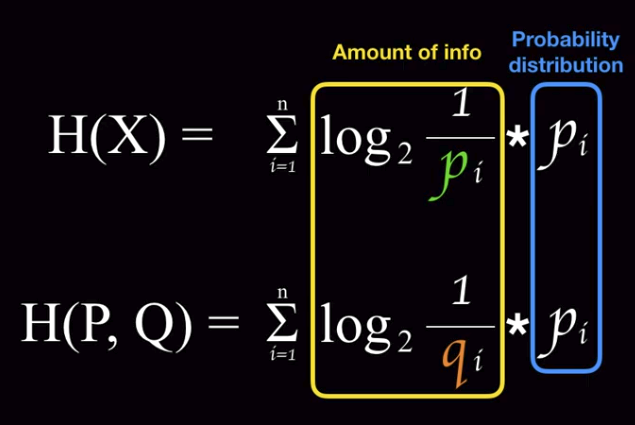

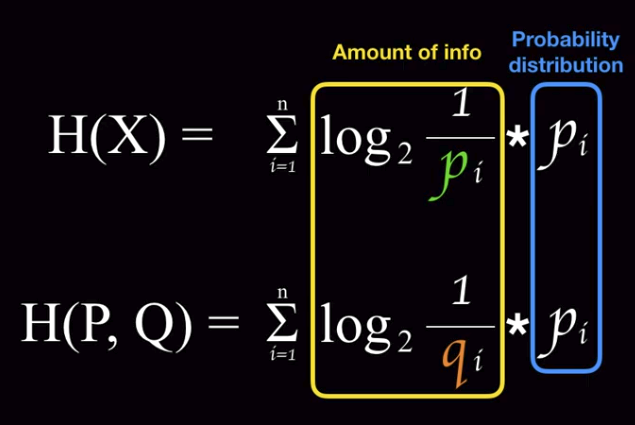

Compare entropy and cross entropy:

Entropy

$$$H(X) = \sum\limits_{i=1}^{n} \log_2 {\dfrac{1}{p_i}} * p_i$$$

Cross Entropy

$$$H(P,Q) = \sum\limits_{i=1}^{n} \log_2 {\dfrac{1}{q_i}} * p_i$$$


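Terms in the cross entropy formula:

- $$$p_i$$$: probability of the true label

- $$$\log_2 {\dfrac{1}{q_i}}$$$: amount of information, computed from the estimated probability $$$q_i$$$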
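A minimal Python sketch of the two formulas, using only the standard library. The distributions `P` and `Q` are hypothetical three-class examples chosen so the results come out exact, and the helper names `entropy` and `cross_entropy` are illustrative, not from the lecture. Terms with $$$p_i = 0$$$ are skipped, following the usual convention that they contribute 0.

```python
import math

def entropy(p):
    """H(P) = sum_i p_i * log2(1 / p_i); terms with p_i = 0 contribute 0."""
    return sum(pi * math.log2(1 / pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """H(P, Q) = sum_i p_i * log2(1 / q_i); terms with p_i = 0 contribute 0."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # convention: 0 * log2(1 / q_i) = 0
        if qi == 0:
            return math.inf  # log2(1 / 0) diverges
        total += pi * math.log2(1 / qi)
    return total

# Hypothetical distributions over three classes (not from the lecture).
P = [0.5, 0.25, 0.25]  # true probabilities
Q = [0.25, 0.25, 0.5]  # estimated probabilities

print(entropy(P))           # 1.5
print(cross_entropy(P, Q))  # 1.75
```

The cross entropy (1.75) is larger than the entropy (1.5) because `Q` misallocates probability relative to `P`.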

================================================================================

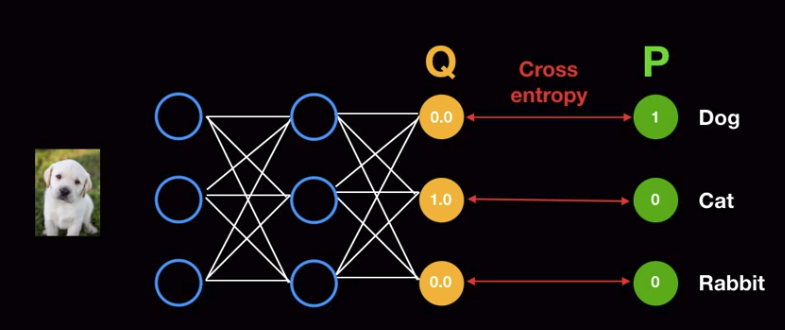

Case: the prediction assigns the wrong probability (0.0 to the true class)

The cross entropy (amount of information) becomes infinite.


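$$$\log_2{\dfrac{1}{0.0}} * 1 + \log_2{\dfrac{1}{1.0}} * 0 + \log_2{\dfrac{1}{0.0}} * 0 = \infty$$$

The first term is infinite because the true class was predicted with probability 0.0; terms multiplied by $$$p_i = 0$$$ are taken as 0 by convention.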
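The infinite case can be checked with a small sketch; the helper `cross_entropy_term` is a hypothetical name, not from the lecture. In Python, evaluating `1 / 0.0` directly raises `ZeroDivisionError`, so the sketch handles the two edge cases explicitly: $$$q_i = 0$$$ with $$$p_i > 0$$$ gives infinity, and $$$p_i = 0$$$ gives 0.

```python
import math

def cross_entropy_term(p_i, q_i):
    """One term p_i * log2(1 / q_i) of the cross entropy sum."""
    if p_i == 0:
        return 0.0       # convention: a class with p_i = 0 adds nothing
    if q_i == 0:
        return math.inf  # log2(1 / 0) diverges, so the term is infinite
    return p_i * math.log2(1 / q_i)

P = [1.0, 0.0, 0.0]  # true label: class 1
Q = [0.0, 1.0, 0.0]  # prediction: all probability on class 2

terms = [cross_entropy_term(p, q) for p, q in zip(P, Q)]
print(terms)       # [inf, 0.0, 0.0]
print(sum(terms))  # inf
```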

================================================================================

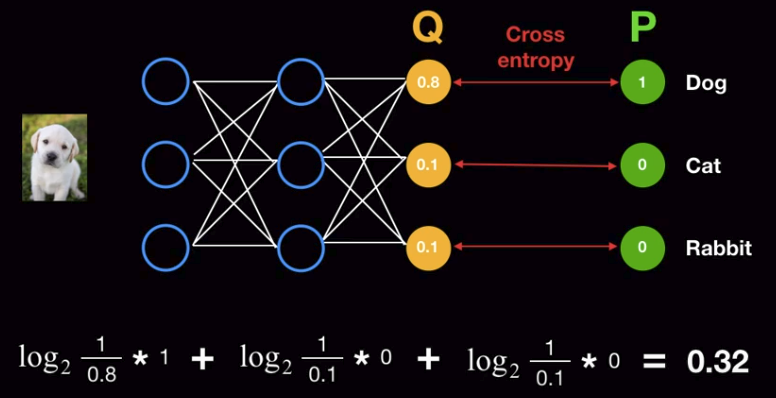

Case: a trained model produces a reasonably accurate prediction


$$$\log_2{\dfrac{1}{0.8}} * 1 + \log_2{\dfrac{1}{0.1}} * 0 + \log_2{\dfrac{1}{0.1}} * 0 \approx 0.32$$$
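A quick numeric check of this case, assuming the same one-hot true label and the prediction (0.8, 0.1, 0.1) from the formula. Every $$$q_i$$$ is non-zero, so each term can be evaluated directly, and only the true-class term survives, giving $$$\log_2 1.25$$$:

```python
import math

P = [1.0, 0.0, 0.0]  # true label: class 1
Q = [0.8, 0.1, 0.1]  # model prediction

# All q_i are non-zero here, so no special-casing is needed.
ce = sum(p * math.log2(1 / q) for p, q in zip(P, Q))
print(round(ce, 2))  # 0.32
```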

================================================================================

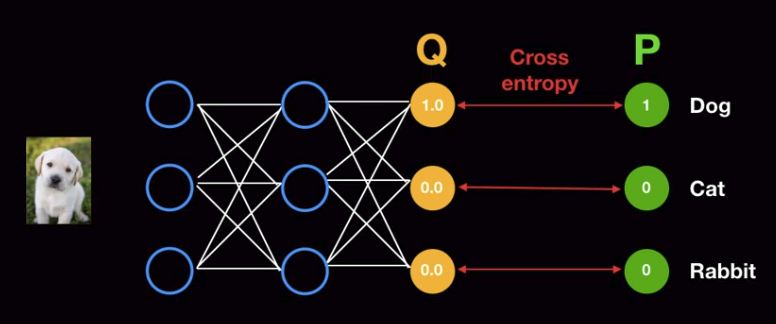

Case: a trained model produces an exact prediction


$$$\log_2{\dfrac{1}{1.0}} * 1 + \log_2{\dfrac{1}{0.0}} * 0 + \log_2{\dfrac{1}{0.0}} * 0 = 0.0$$$

In this case, the cross entropy equals the entropy of the true label: both are 0.0.

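A sketch confirming that entropy and cross entropy coincide when the prediction matches the one-hot true label. The helper functions are illustrative (not from the lecture) and skip terms with $$$p_i = 0$$$.

```python
import math

def entropy(p):
    # Terms with p_i = 0 are skipped (convention 0 * log2(1/0) = 0).
    return sum(pi * math.log2(1 / pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    # Only classes with p_i > 0 contribute; q_i must be non-zero for those.
    return sum(pi * math.log2(1 / qi) for pi, qi in zip(p, q) if pi > 0)

P = [1.0, 0.0, 0.0]  # true label
Q = [1.0, 0.0, 0.0]  # prediction identical to the true label

print(entropy(P), cross_entropy(P, Q))  # 0.0 0.0
```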
================================================================================

Cross Entropy $$$\ge$$$ Entropy

- Entropy: the amount of information derived from the true label alone

- Cross Entropy: the amount of information derived from the true label together with potentially incorrect information (the prediction)

Equality holds only when the estimated distribution $$$Q$$$ matches the true distribution $$$P$$$.
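The inequality can be spot-checked numerically. The gap $$$H(P,Q) - H(P)$$$ is the Kullback-Leibler divergence, which is never negative (Gibbs' inequality). The sketch below draws random distributions with a hypothetical helper `random_distribution` and a fixed seed; it is an illustration, not a proof.

```python
import math
import random

def entropy(p):
    return sum(pi * math.log2(1 / pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    return sum(pi * math.log2(1 / qi) for pi, qi in zip(p, q) if pi > 0)

def random_distribution(n, rng):
    # Hypothetical helper: strictly positive weights normalized to sum to 1.
    w = [rng.random() + 1e-9 for _ in range(n)]
    s = sum(w)
    return [x / s for x in w]

rng = random.Random(0)
for _ in range(1000):
    P = random_distribution(3, rng)
    Q = random_distribution(3, rng)
    # Small tolerance for floating-point rounding when Q is close to P.
    assert cross_entropy(P, Q) >= entropy(P) - 1e-12

# Equality when the estimate matches the truth.
P = [0.2, 0.3, 0.5]
print(math.isclose(cross_entropy(P, P), entropy(P)))  # True
```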


================================================================================