================================================================================

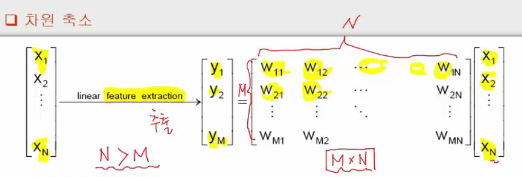

* Feature extraction

original_feature_vector=[x_1,x_2,...x_N]

dim_reduced_feat_vector=linear_feature_extraction(original_feature_vector)

print(dim_reduced_feat_vector)

# [y_1,y_2,...y_M]

================================================================================

* $$$Y = f(X), \quad \mathbb{Y}^M = \mathbb{W}^{M\times N} \mathbb{X}^N \quad (M < N)$$$

* dim_reduced_feat_vector = transform_mat $$$\times$$$ original_feature_vector
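* A minimal sketch of the matrix product above, assuming NumPy. The second argument `transform_mat` and the numeric values are illustrative assumptions; the notes only name `linear_feature_extraction` with a single argument.

```python
import numpy as np

def linear_feature_extraction(original_feature_vector, transform_mat):
    """Project an N-dimensional vector to M dimensions: y = W x."""
    x = np.asarray(original_feature_vector, dtype=float)
    W = np.asarray(transform_mat, dtype=float)
    if W.ndim != 2 or W.shape[1] != x.shape[0]:
        raise ValueError("transform_mat must have shape (M, N) with N == len(x)")
    return W @ x

# Illustrative values only (N = 3, M = 2)
original_feature_vector = [1.0, 2.0, 3.0]
transform_mat = [[1.0, 0.0, 0.0],   # keeps x_1
                 [0.0, 0.5, 0.5]]   # averages x_2 and x_3

dim_reduced_feat_vector = linear_feature_extraction(original_feature_vector, transform_mat)
print(dim_reduced_feat_vector.tolist())  # [1.0, 2.5]
```

* Each row of `transform_mat` produces one output feature, so the number of rows sets the reduced dimension M.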

================================================================================

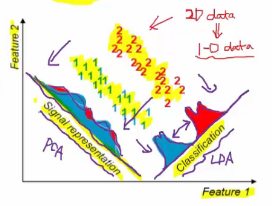

* W depends on your purpose, i.e. on which property of the features you most want to preserve

* Purpose1: signal representation

- Precise data representation in lower dimension

- W is found by PCA

* Purpose2: classification

- Easier classification in lower dimension

- W is found by LDA
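
* As a sketch of what "found by PCA" and "found by LDA" mean, the two criteria can be written in their standard textbook form. The symbols $$$S_T$$$, $$$S_W$$$, $$$S_B$$$, $$$\mu$$$, $$$\mu_c$$$, $$$n_c$$$ and $$$C$$$ (number of classes) are notation assumed here, not taken from the slides.

- PCA (maximize the variance kept): $$$W_{PCA} = \arg\max_{W W^T = I} \operatorname{tr}(W S_T W^T), \quad S_T = \frac{1}{n}\sum_{i=1}^{n}(x_i-\mu)(x_i-\mu)^T$$$

- The rows of $$$W_{PCA}$$$ are the $$$M$$$ eigenvectors of $$$S_T$$$ with the largest eigenvalues

- LDA (maximize between-class scatter relative to within-class scatter): $$$W_{LDA} = \arg\max_{W} \operatorname{tr}\left((W S_W W^T)^{-1} W S_B W^T\right)$$$

- $$$S_W = \sum_{c=1}^{C}\sum_{i \in c}(x_i-\mu_c)(x_i-\mu_c)^T, \quad S_B = \sum_{c=1}^{C} n_c(\mu_c-\mu)(\mu_c-\mu)^T$$$

- The rows of $$$W_{LDA}$$$ are the leading eigenvectors of $$$S_W^{-1} S_B$$$, so $$$M \le C-1$$$; for two classes this reduces to $$$w \propto S_W^{-1}(\mu_1-\mu_0)$$$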

================================================================================

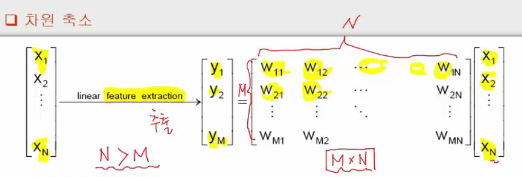

================================================================================

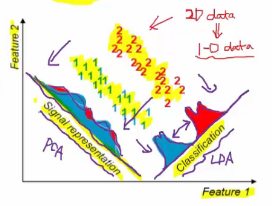

* 2D feature vector

* 1D feature vector for better signal representation using PCA

- The projection keeps as much of the data's variance as possible

* 1D feature vector for better classification using LDA

- Easier classification
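
* The 2D to 1D contrast above can be reproduced with a small sketch, assuming NumPy and two-class Fisher LDA. The data points are hypothetical, chosen so that both classes spread widely along x but are separated along y; they are not the data in the figure.

```python
import numpy as np

# Hypothetical 2D data: wide spread along x, class separation along y.
X0 = np.array([[-4, 0.0], [-2, 0.2], [0, -0.2], [2, 0.1], [4, -0.1]])  # class 0
X1 = np.array([[-4, 1.0], [-2, 1.2], [0, 0.8], [2, 1.1], [4, 0.9]])    # class 1
X = np.vstack([X0, X1])

# PCA: eigenvector of the covariance matrix with the largest eigenvalue.
cov = np.cov(X, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
w_pca = eigvecs[:, -1]

# Two-class Fisher LDA: direction proportional to S_w^{-1} (mu1 - mu0).
mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
S_w = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
w_lda = np.linalg.solve(S_w, mu1 - mu0)
w_lda /= np.linalg.norm(w_lda)

def classes_overlap(w):
    """True if the 1D projections of the two classes share any interval."""
    z0, z1 = X0 @ w, X1 @ w
    return z0.max() >= z1.min() and z1.max() >= z0.min()

print("variance kept   PCA:", np.var(X @ w_pca), " LDA:", np.var(X @ w_lda))
print("classes overlap PCA:", classes_overlap(w_pca), " LDA:", classes_overlap(w_lda))
```

* On this data the PCA axis lies almost along x, so it keeps more variance but the two classes overlap in 1D; the LDA axis lies almost along y, so it keeps less variance but the classes no longer overlap.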
