This is a personal study note.

Copyright and original reference:

www.youtube.com/watch?v=GJW6trVTDWM&list=PLbhbGI_ppZISMV4tAWHlytBqNq1-lb8bz

================================================================================

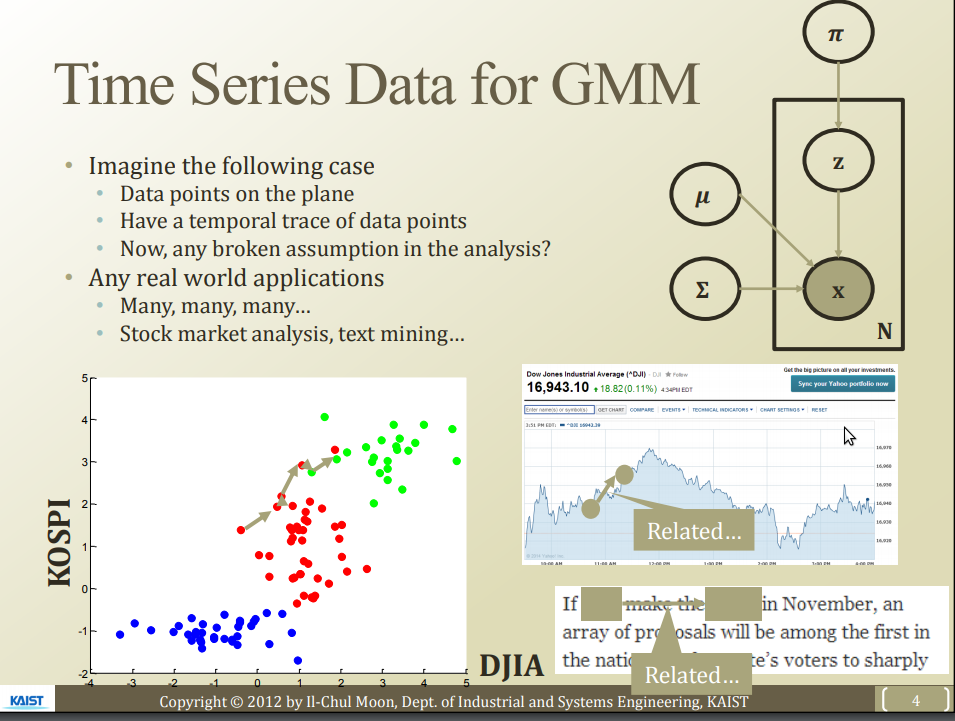

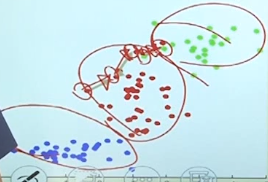

Gaussian Mixture Model

- Given multiple points in 2D,

- GMM finds clusters of those points

- GMM can be used in 2D, 3D, 4D, ...

================================================================================

Space and time are different things

time

- irreversible

How can we model an algorithm by adding a "time" component?

================================================================================

- How can an HMM be expressed?

- An HMM can also be expressed as a graphical model

- What are the major research questions in HMM?

- To investigate research questions with an HMM, you should know how to calculate probabilities and how to infer probabilities

- Link HMM with previous lectures

- The EM algorithm (used for GMM) is also used for HMM

================================================================================

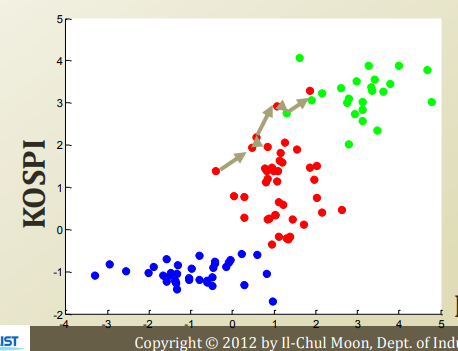

* This model does not consider "time information"

* It contains only "spatial information"

* The data form 3 clusters

================================================================================

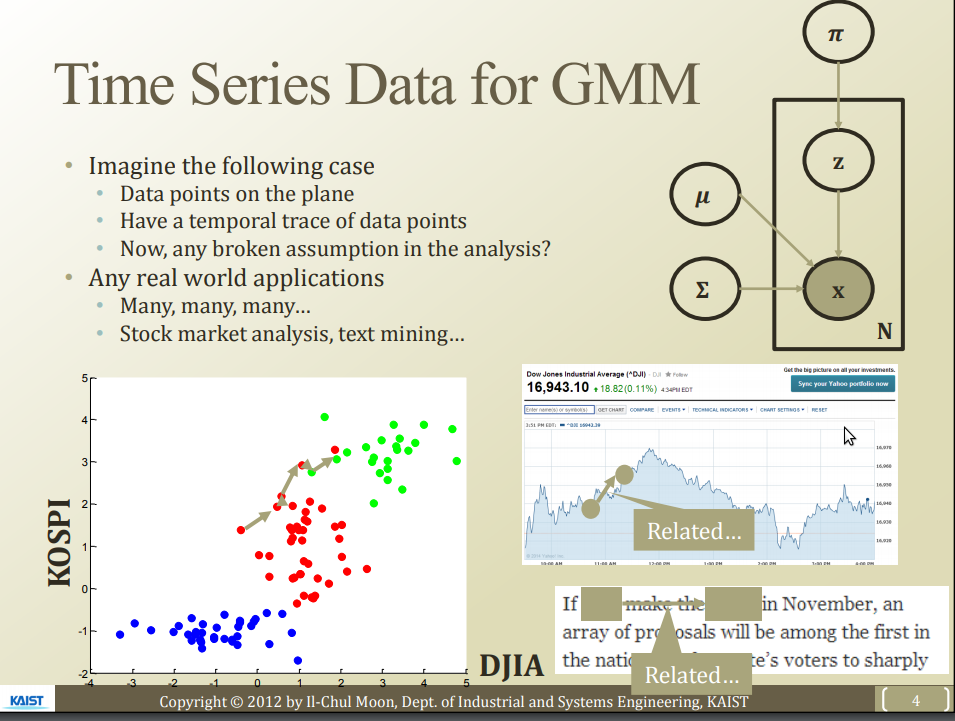

* What if the points move as time flows?

* Then, how should the Gaussian Mixture Model change?

================================================================================

* "Points moving as time flows" appear in various fields

* "Yesterday's value" and "today's value" are related

* In other words, "yesterday's value" affects "today's value"

* There can also be a latent driving force (a random variable) that moves those values

================================================================================

* The latent driving force can change, resulting in a different value pattern

================================================================================

Question:

It would be good to have a "time-series-based mixture model"

================================================================================

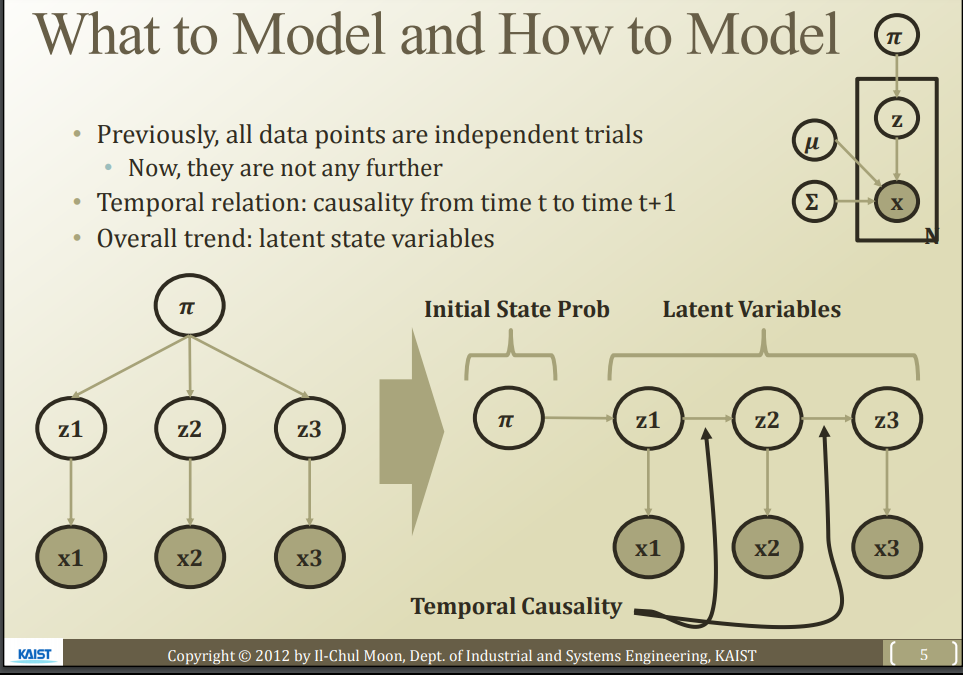

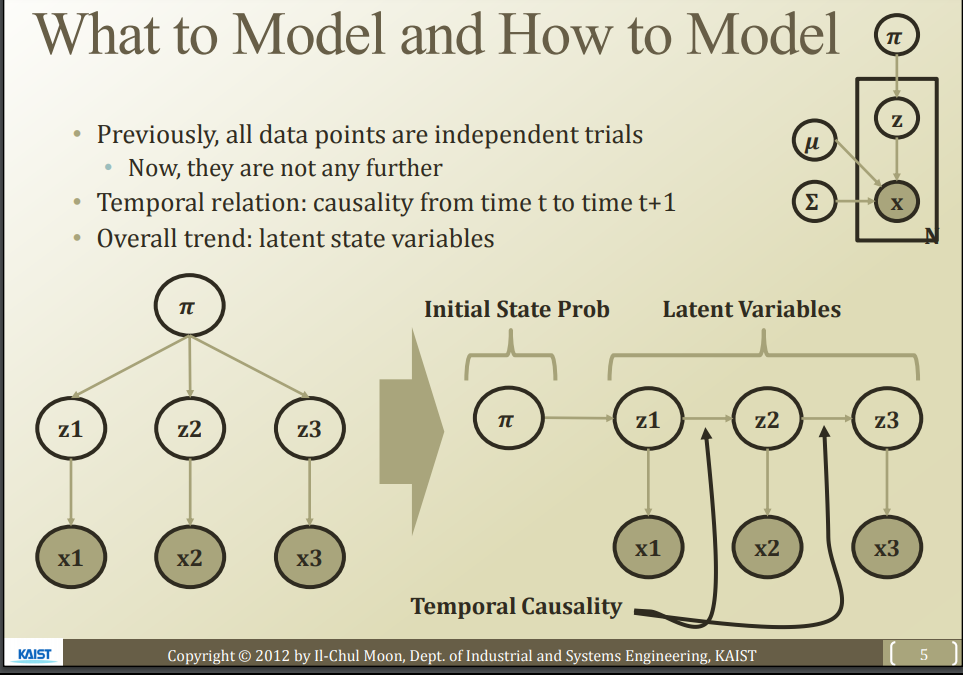

* Gaussian Mixture Model in plate notation

* $$$N$$$: the number of data points, each expressed as $$$x$$$

* $$$z$$$: latent factor that assigns each of the $$$N$$$ data points to a cluster

* $$$\pi, \mu, \sigma$$$: parameters; $$$\pi$$$ governs $$$z$$$, and $$$\mu, \sigma$$$ govern $$$x$$$
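A minimal sketch of the generative process this plate notation describes, assuming NumPy and isotropic Gaussian clusters (one standard deviation per cluster). The helper `sample_gmm` and all parameter values are hypothetical, chosen only for illustration. Every $$$z_n$$$ is drawn from the same fixed $$$\pi$$$, so the cluster labels never depend on each other.

```python
import numpy as np

def sample_gmm(pi, mu, sigma, n, seed=0):
    """Draw n points from a GMM: z_n ~ Mult(pi), x_n ~ N(mu[z_n], sigma[z_n]^2 I).

    Illustrative assumptions: isotropic Gaussians (sigma holds one standard
    deviation per cluster) and mu of shape (K, D) for D-dimensional points.
    """
    rng = np.random.default_rng(seed)
    pi = np.asarray(pi, dtype=float)
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    # Every z_n is drawn from the same fixed pi, independently of the others.
    z = rng.choice(len(pi), size=n, p=pi)
    # Each x_n depends only on its own cluster's mu and sigma.
    x = mu[z] + sigma[z][:, None] * rng.standard_normal((n, mu.shape[1]))
    return z, x

# Hypothetical parameters: 3 clusters in 2D.
pi = [0.5, 0.3, 0.2]
mu = [[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]]
sigma = [1.0, 0.5, 0.8]
z, x = sample_gmm(pi, mu, sigma, n=300)
print(z[:10], x.shape)
```

Giving each mean vector more entries is all it takes to sample 3D, 4D, ... points with the same function.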

================================================================================

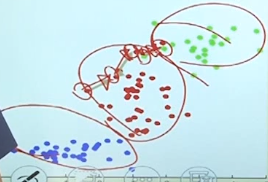

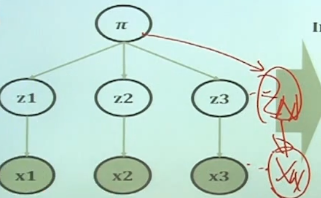

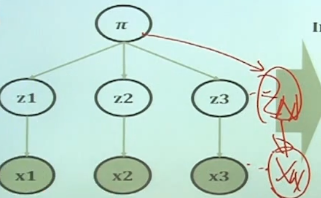

Unfolded view

* Parameter $$$\pi$$$ (the parameter of the multinomial distribution from which z is drawn) affects latent factor z

* If parameter $$$\pi$$$ is fixed, $$$z_1,z_2,\cdots,z_N$$$ are independent of each other

* So this model cannot express "time": no $$$z_n$$$ depends on the previous one

* So the graphical model above should be changed

================================================================================

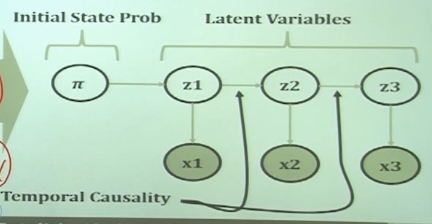

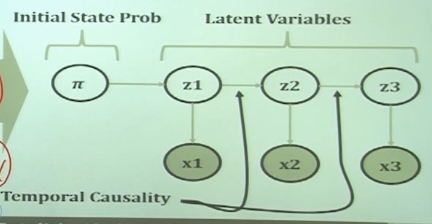

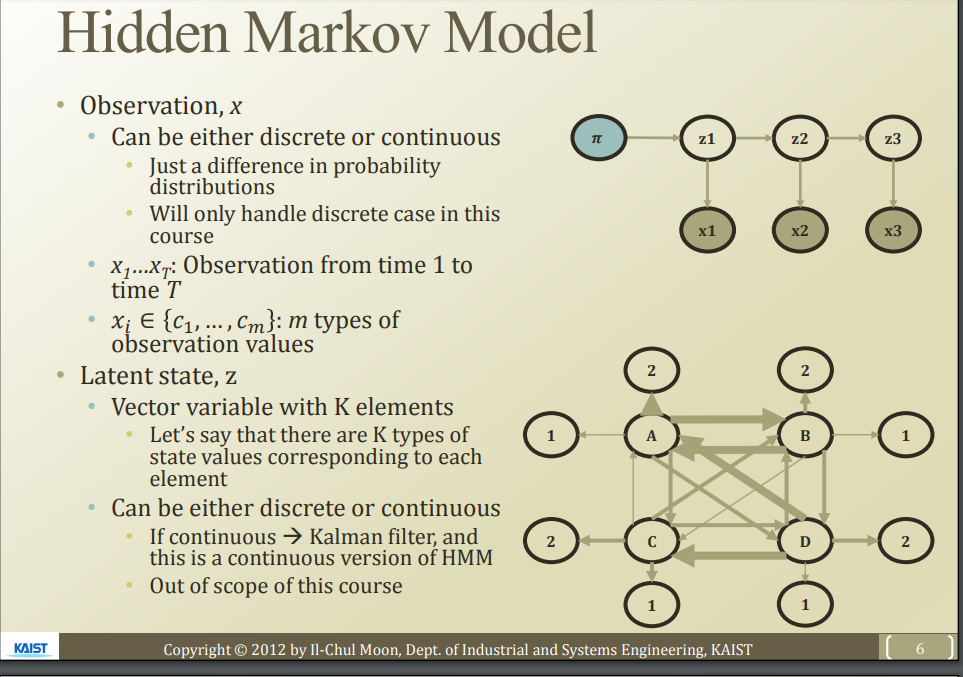

* Parameter $$$\pi$$$ affects $$$z_1$$$ (the latent factor at time 1)

* $$$x_1$$$ is observed

* $$$z_1$$$ affects $$$z_2$$$ (the next latent factor; this link is how "time information" enters the model)

* This graphical model can represent how the latent factors change over time
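A minimal sketch of sampling from this chain-shaped graphical model, assuming NumPy, k discrete states, a transition matrix `A` with `A[i, j]` $$$= P(z_t = j \mid z_{t-1} = i)$$$, and 1-D Gaussian emissions as in a GMM. The helper `sample_hmm` and the numbers are hypothetical. Compared with the GMM sampler, the only change is that each $$$z_t$$$ is drawn from a row selected by $$$z_{t-1}$$$ instead of from $$$\pi$$$.

```python
import numpy as np

def sample_hmm(pi, A, mu, sigma, T, seed=0):
    """Sample latent states z_1..z_T and observations x_1..x_T from an HMM.

    Illustrative assumptions: k discrete states, A[i, j] = P(z_t = j | z_{t-1} = i),
    and 1-D Gaussian emissions N(mu[k], sigma[k]^2) for state k.
    """
    rng = np.random.default_rng(seed)
    pi = np.asarray(pi, dtype=float)
    A = np.asarray(A, dtype=float)
    z = np.empty(T, dtype=int)
    z[0] = rng.choice(len(pi), p=pi)               # pi only affects z_1
    for t in range(1, T):
        z[t] = rng.choice(len(pi), p=A[z[t - 1]])  # z_{t-1} affects z_t
    # Each x_t depends only on its own z_t, exactly as in a GMM.
    x = rng.normal(np.asarray(mu, dtype=float)[z], np.asarray(sigma, dtype=float)[z])
    return z, x

# Hypothetical "sticky" 2-state model: states tend to persist over time.
pi = [0.6, 0.4]
A = [[0.9, 0.1],
     [0.2, 0.8]]
z, x = sample_hmm(pi, A, mu=[0.0, 5.0], sigma=[1.0, 1.0], T=20)
print(z)
```

With large diagonal entries in `A`, the sampled states come in runs, which is the "dynamic clustering" behavior a plain GMM cannot produce.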

================================================================================

A "Hidden Markov Model" (HMM) is a GMM extended with "temporal causality"

Hidden Markov Model = dynamic clustering

================================================================================

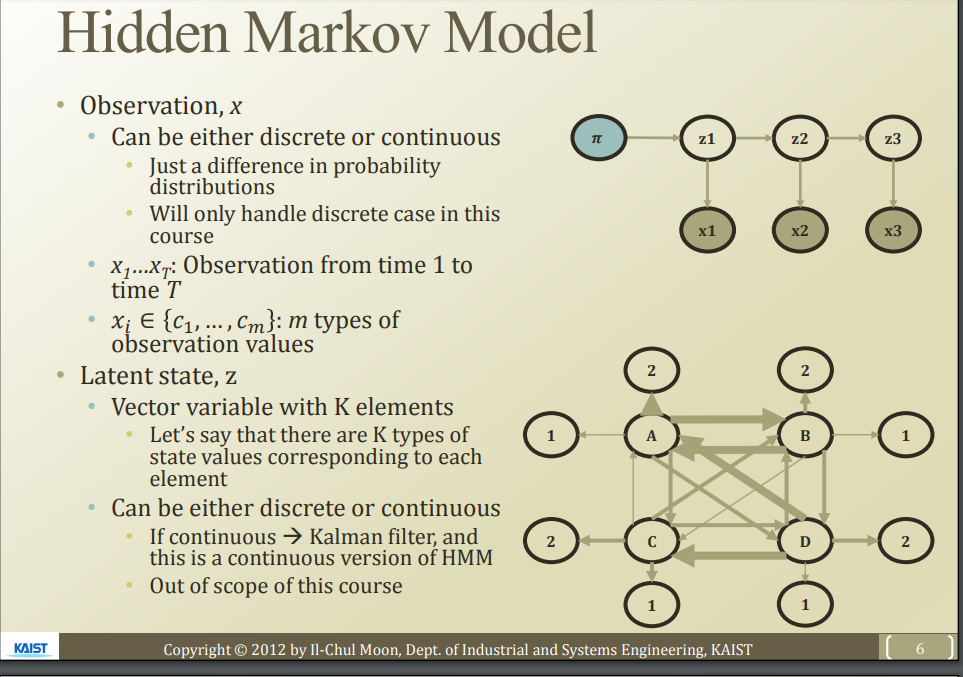

HMM in graphical model

Observations: $$$x_1, x_2, x_3$$$

Observations can be discrete or continuous

Observations in GMM: continuous

================================================================================

The edge from $$$z_1$$$ to $$$x_1$$$ above is modeled by $$$P(x_1|z_1)$$$

If P is a Gaussian (normal) distribution, a continuous random variable is modeled

If P is a binomial or multinomial distribution, a discrete random variable is modeled

The choice of probability distribution is yours
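Since the distribution is a modeling choice, here is a small plain-Python sketch of two possible choices for $$$P(x_1|z_1)$$$: a normal density for a continuous observation and a table lookup (a one-trial multinomial) for a discrete one. The function names and parameter values are hypothetical; in an HMM the rest of the model stays the same and only this function changes.

```python
import math

def gaussian_emission(x, mean, std):
    """P(x | z) for a continuous x: normal density with the state's mean and std."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def categorical_emission(x, probs):
    """P(x | z) for a discrete x in {0, ..., m-1}: one multinomial trial."""
    return probs[x]

# Hypothetical parameters for one latent state.
print(gaussian_emission(0.0, mean=0.0, std=1.0))  # about 0.3989, i.e. 1 / sqrt(2*pi)
print(categorical_emission(2, [0.1, 0.3, 0.6]))   # 0.6
```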

================================================================================

$$$x_1,x_2,\cdots,x_T$$$: observations (data points) that have temporal causality

$$$x_1$$$ comes before $$$x_2$$$

================================================================================

Each x can consist of multiple observed values

In other words, x can be a vector

================================================================================

Latent (invisible) factor z

Latent state z

Suppose there are k dynamic time groups (latent states)

================================================================================

Suppose $$$x_1$$$ belongs to time group 1

If there is no trend change (no latent factor change), $$$x_2$$$ also belongs to time group 1

If there is a trend change (a latent factor change), $$$x_2$$$ belongs to time group 2

================================================================================

K elements: z is a vector with K elements, one per component (latent state)

================================================================================

If the latent factor is continuous instead of discrete as in HMM: Kalman filter

================================================================================

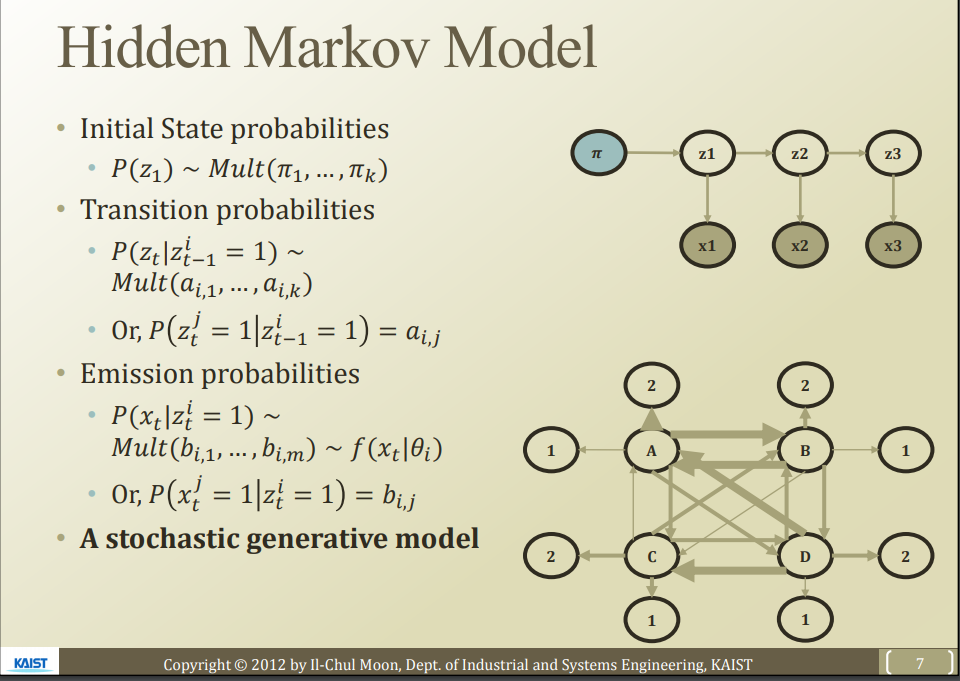

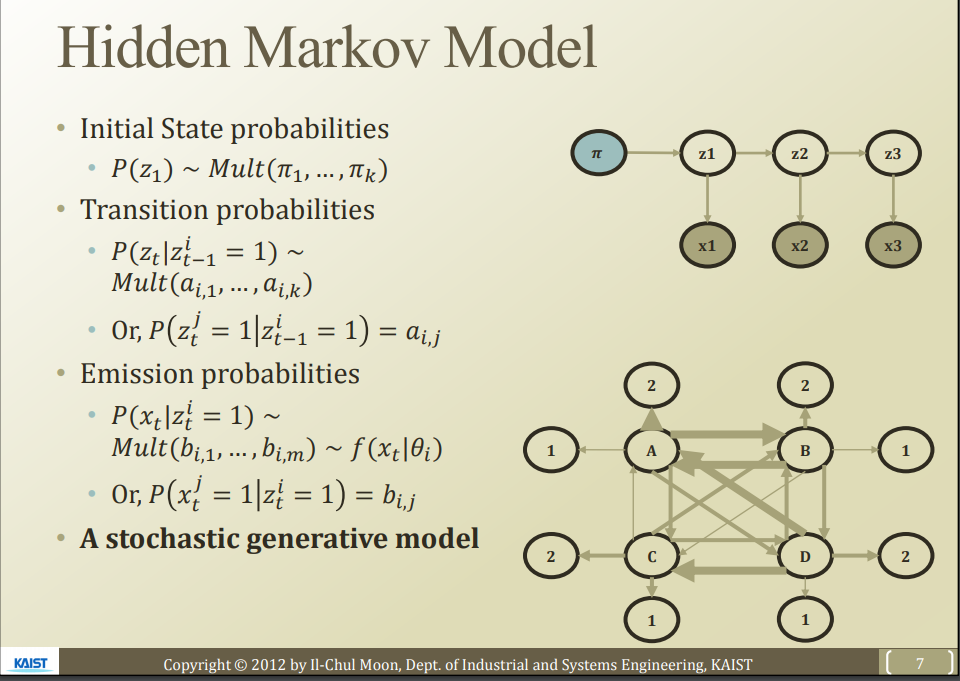

* Initial state probabilities

$$$P(z_1) \sim Mult(\{\pi_1,\pi_2,\cdots,\pi_k\})$$$

- The first latent factor $$$z_1$$$ is sampled from a multinomial distribution

- That multinomial distribution has parameter $$$\pi$$$ (one probability per latent state)

- So, to train an HMM, you must infer $$$\pi$$$
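A small NumPy sketch of this step, assuming k = 3 states and made-up values of $$$\pi$$$. One multinomial trial yields exactly the one-hot vector behind the notation $$$z_1^i = 1$$$. The second half only illustrates what "inferring $$$\pi$$$" targets: it pretends $$$z_1$$$ is observed, which it is not in a real HMM, where an EM-style procedure is needed instead.

```python
import numpy as np

rng = np.random.default_rng(0)
pi = np.array([0.2, 0.5, 0.3])   # hypothetical initial state probabilities, k = 3

# One multinomial trial yields a one-hot vector: z_1^i = 1 for the sampled state i.
z1 = rng.multinomial(1, pi)
print(z1, int(np.argmax(z1)))

# If z_1 were observed in many sequences, the maximum-likelihood estimate of pi
# would be the average of the one-hot vectors. In a real HMM z_1 is hidden,
# which is why an EM-style procedure is needed instead.
samples = rng.multinomial(1, pi, size=10_000)
pi_hat = samples.mean(axis=0)
print(pi_hat)
```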

================================================================================

* Transition probabilities

- Probability of moving from $$$z_1$$$ to $$$z_2$$$ (in general, from $$$z_{t-1}$$$ to $$$z_t$$$)

- $$$P(z_t|z_{t-1}^i=1) \sim Mult(\{\alpha_{i,1},\alpha_{i,2},\cdots,\alpha_{i,k}\})$$$

- Given that z was in cluster i at the previous time, this is the probability of each cluster for z at the current time

- $$$z_{t-1}^i=1$$$: z's time cluster at the previous time is i

- $$$z_t$$$: z's time cluster at the current time

- $$$\alpha$$$ is the parameter of the multinomial distribution

- Equivalent element-wise form:

- $$$P(z_t^j=1|z_{t-1}^i=1) = \alpha_{i,j}$$$

$$$z_{t-1}^i=1$$$: z is given to be in the i-th cluster

$$$z_t^j=1$$$: z is in the j-th cluster
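A minimal NumPy sketch, assuming k = 3 states and made-up values: the $$$\alpha_{i,j}$$$ form a k×k transition matrix whose i-th row is the multinomial parameter of $$$P(z_t|z_{t-1}^i=1)$$$, so each row sums to 1. Summing over the previous state, $$$P(z_t^j=1) = \sum_i P(z_{t-1}^i=1)\,\alpha_{i,j}$$$, gives the distribution of $$$z_t$$$ at any time; `state_marginal` is a hypothetical helper name.

```python
import numpy as np

# Hypothetical transition matrix for k = 3 states:
# alpha[i, j] = P(z_t^j = 1 | z_{t-1}^i = 1), so each row is one Mult(...) and sums to 1.
alpha = np.array([
    [0.8, 0.1, 0.1],
    [0.2, 0.7, 0.1],
    [0.3, 0.3, 0.4],
])
assert np.allclose(alpha.sum(axis=1), 1.0)

def state_marginal(pi, alpha, t):
    """P(z_t) for t = 1, 2, ...: push P(z_1) = pi through t - 1 transitions."""
    p = np.asarray(pi, dtype=float)
    for _ in range(t - 1):
        p = p @ alpha   # P(z_t^j=1) = sum_i P(z_{t-1}^i=1) * alpha[i, j]
    return p

pi = np.array([1.0, 0.0, 0.0])        # start in state 0 for sure
print(state_marginal(pi, alpha, 2))   # first row of alpha: [0.8 0.1 0.1]
```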

================================================================================

- The edge above (from $$$z_t$$$ to $$$x_t$$$) is modeled by "emission probabilities"

$$$P(x_t|z_t^i=1) \sim Mult(b_{i,1},\cdots,b_{i,m}) \sim f(x_t|\theta_i)$$$

* $$$z_t^i=1$$$: latent factor z is given to be in the i-th cluster

* $$$x_t$$$: visible observation x

* Since we assume the discrete case,

* a multinomial distribution is used to model x

* b is the parameter of that multinomial distribution

* m: the number of possible discrete observation values

* How $$$b_{i,j}$$$ is defined

- $$$P(x_t^j=1|z_t^i=1) = b_{i,j}$$$

- $$$z_t^i=1$$$: z is given to be in the i-th cluster

- $$$b_{i,j}$$$: probability of the j-th observation value
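With the initial, transition, and emission probabilities in place, the graphical model factorizes the joint probability of a state sequence and an observation sequence as $$$P(z_1)\prod_{t=2}^{T}P(z_t|z_{t-1})\prod_{t=1}^{T}P(x_t|z_t) = \pi_{z_1} b_{z_1,x_1}\prod_{t=2}^{T}\alpha_{z_{t-1},z_t} b_{z_t,x_t}$$$. A small NumPy sketch under illustrative assumptions (2 states, m = 3 observation values, 0-based integer indices, made-up numbers); `joint_log_prob` is a hypothetical name. It works in log space because products of many probabilities underflow on long sequences.

```python
import numpy as np

def joint_log_prob(z, x, pi, alpha, b):
    """log P(x_1..x_T, z_1..z_T) for a discrete-emission HMM.

    pi[z_1] * prod_{t>=2} alpha[z_{t-1}, z_t] * prod_t b[z_t, x_t],
    with states and observations given as 0-based integer indices.
    """
    lp = np.log(pi[z[0]]) + np.log(b[z[0], x[0]])
    for t in range(1, len(z)):
        lp += np.log(alpha[z[t - 1], z[t]]) + np.log(b[z[t], x[t]])
    return lp

pi = np.array([0.6, 0.4])
alpha = np.array([[0.7, 0.3],
                  [0.4, 0.6]])
b = np.array([[0.5, 0.4, 0.1],   # b[i, j] = P(x_t^j = 1 | z_t^i = 1), m = 3
              [0.1, 0.3, 0.6]])
z = [0, 0, 1]
x = [0, 1, 2]
print(np.exp(joint_log_prob(z, x, pi, alpha, b)))  # 0.6*0.5 * 0.7*0.4 * 0.3*0.6
```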

================================================================================

- Suppose A, B, C, D are latent states

- State A ---> State B ---> State C ...: the state changes according to the transition probabilities

- Each state emits an observation (1 or 2) according to its emission probabilities
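A toy simulation of the A, B, C, D picture using only Python's standard library; every probability below is made up for illustration, and `draw`/`run` are hypothetical helpers. Each step records the hidden state, lets it emit 1 or 2, and then transitions. Printing the two lists shows what the HMM keeps apart: the hidden state sequence versus the observation sequence you actually see.

```python
import random

# Hypothetical 4-state HMM with states A-D and observations 1 or 2.
start = {"A": 1.0, "B": 0.0, "C": 0.0, "D": 0.0}
trans = {
    "A": {"A": 0.5, "B": 0.5, "C": 0.0, "D": 0.0},
    "B": {"A": 0.0, "B": 0.5, "C": 0.5, "D": 0.0},
    "C": {"A": 0.0, "B": 0.0, "C": 0.5, "D": 0.5},
    "D": {"A": 0.5, "B": 0.0, "C": 0.0, "D": 0.5},
}
emit = {"A": {1: 0.9, 2: 0.1}, "B": {1: 0.6, 2: 0.4},
        "C": {1: 0.4, 2: 0.6}, "D": {1: 0.1, 2: 0.9}}

def draw(dist, rng):
    """Pick one key of dist with probability equal to its value."""
    keys = list(dist)
    return rng.choices(keys, weights=[dist[k] for k in keys])[0]

def run(T, seed=0):
    rng = random.Random(seed)
    hidden, observed = [], []
    s = draw(start, rng)
    for _ in range(T):
        hidden.append(s)
        observed.append(draw(emit[s], rng))  # the current state emits 1 or 2
        s = draw(trans[s], rng)              # then the state transitions
    return hidden, observed

hidden, observed = run(10)
print("hidden:  ", hidden)    # what you cannot see
print("observed:", observed)  # what you actually see
```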

================================================================================

Example

State: the professor is angry

You cannot actually know the professor's mind (the state is hidden)

But through, for example, the professor's facial expression, you can see an observation emitted from the professor's mental state

- The professor's (mental) state, latent factor z, can change (transition) as time goes on

- The professor's facial expression may seem to change constantly,

but the latent factor (the professor's inner mind) can stay in the same state

- In conclusion, the main characteristic of HMM is that

as time goes on, the "latent factors z" and the "observations emitted from latent factor z" are modeled separately: z changes through transitions, and each observation depends only on the current z
