This is personal study note

Copyright and original reference:

https://www.youtube.com/watch?v=Rt6_3_GEbCY&list=PLbhbGI_ppZISMV4tAWHlytBqNq1-lb8bz&index=59

================================================================================

In HMM, decoding problem is complicated from factorization on full joint probability

In decoding problem:

too complicated equation = factorize(full joint probability distribution)

recursive but simple form = trick(too complicated equation)

================================================================================

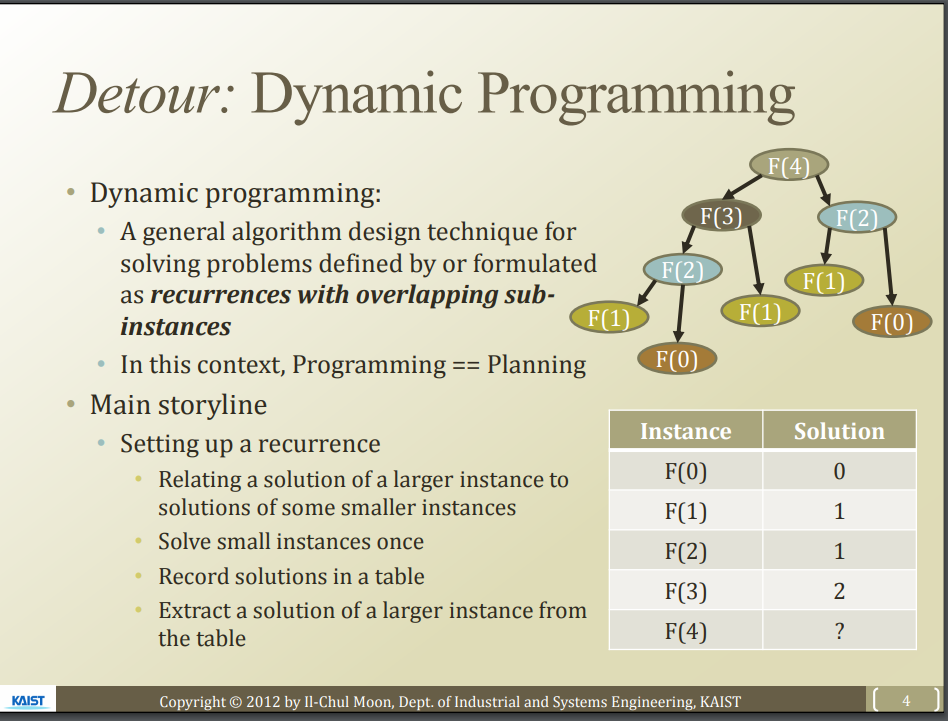

solve.by_recursion(repeating problem)

solve.by_dynamic_programming(repeating problem)

================================================================================

computation.recycle(recurrences with overlapping subinstances)

================================================================================

computation.recycle(recurrences with overlapping subinstances)

================================================================================

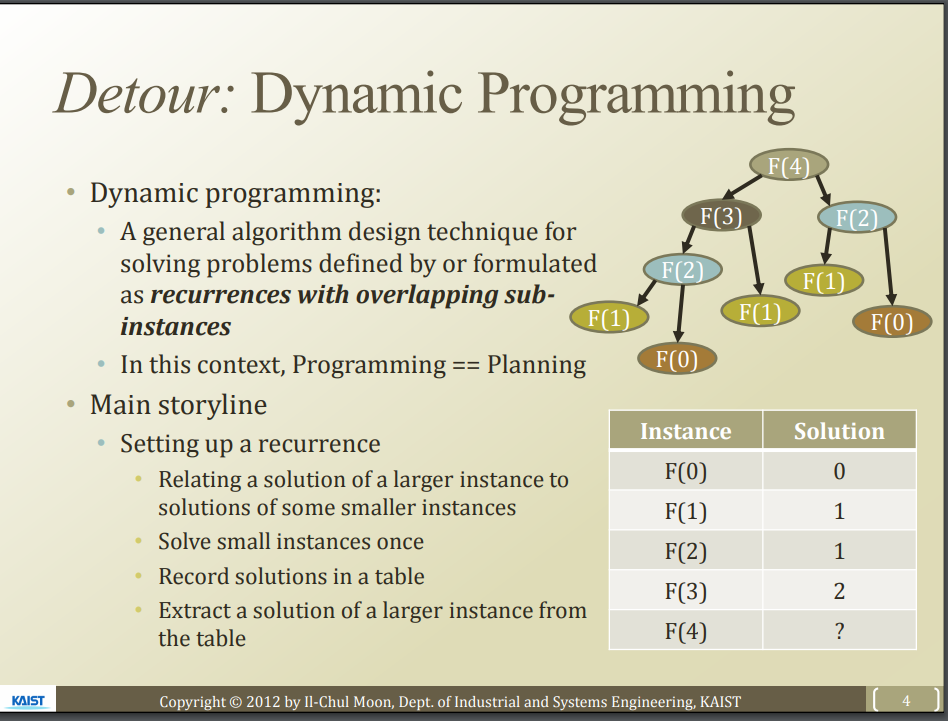

F(): fibonacci sequence

F(4) = F(3) + F(2) = ....

F(3): 1 compuation

F(2): 2 compuations

F(1): 3 compuations

F(0): 2 compuations

repeating problem.solve.by_using(recursive calculation)

: too much computation and time by iterative computation

================================================================================

Then, use "dynamic programming"

================================================================================

F(): fibonacci sequence

F(4) = F(3) + F(2) = ....

F(3): 1 compuation

F(2): 2 compuations

F(1): 3 compuations

F(0): 2 compuations

repeating problem.solve.by_using(recursive calculation)

: too much computation and time by iterative computation

================================================================================

Then, use "dynamic programming"

================================================================================

================================================================================

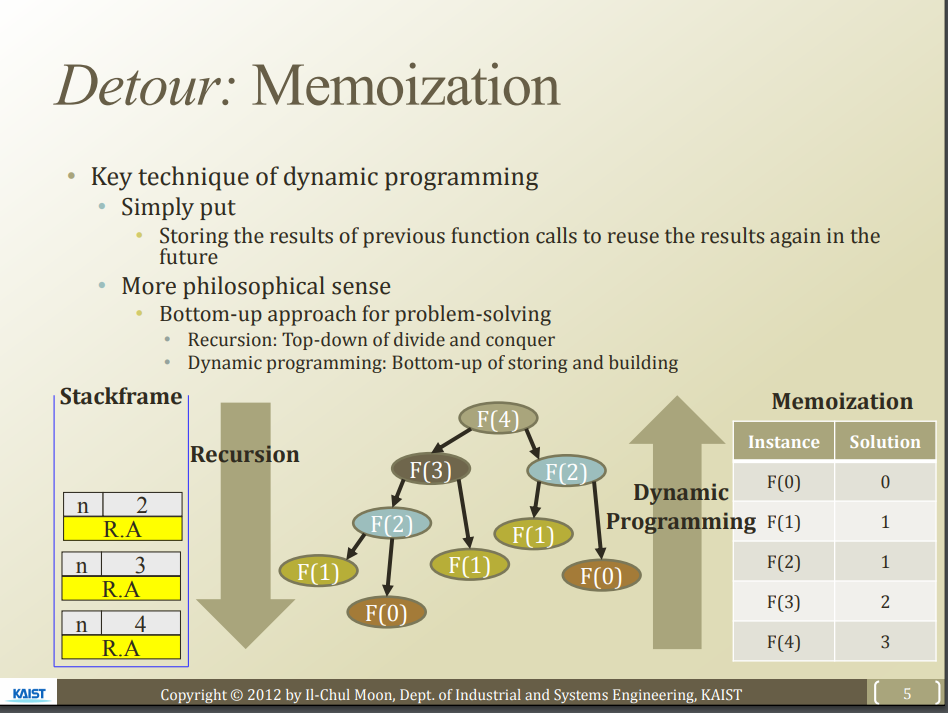

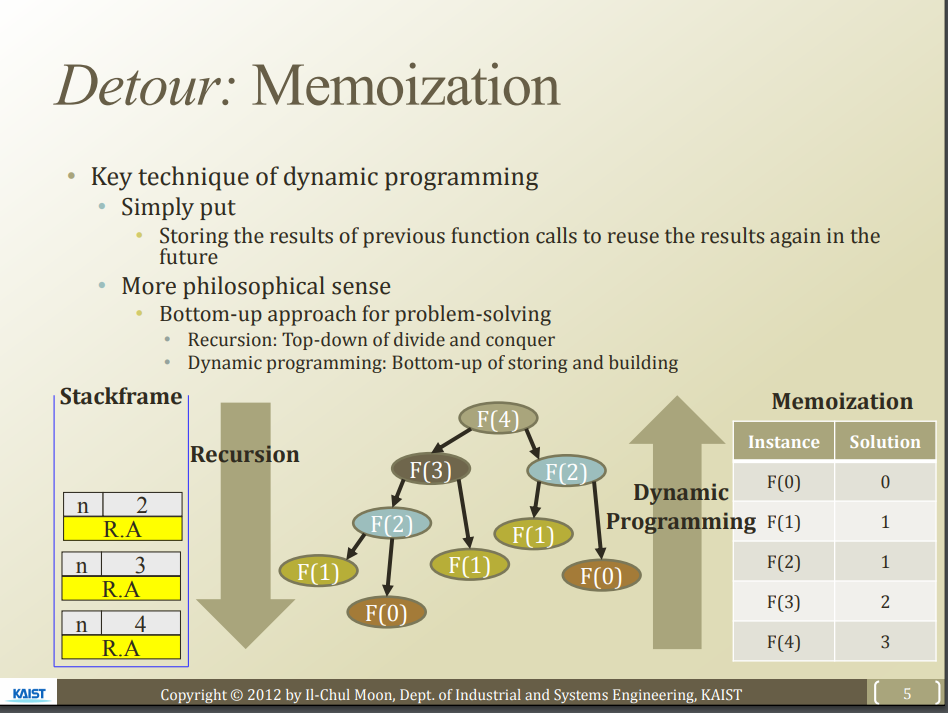

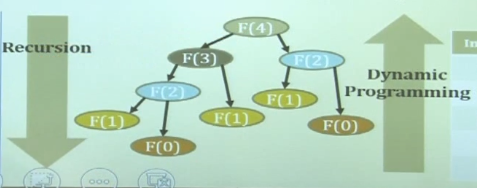

Recursion: top down

Dynamic programming bottom up

================================================================================

Recursion: top down

Dynamic programming bottom up

================================================================================

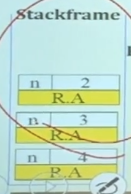

Recursion uses stackframe

================================================================================

Recursion uses stackframe

================================================================================

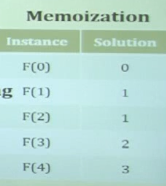

Dynamic programming uses memorization table

================================================================================

Dynamic programming uses memorization table

================================================================================

Forward probability calculation

$$$\alpha_t^k$$$: forward probability

dimension: time $$$\times$$$ states

================================================================================

Forward algorithm

- X and $$$\alpha_t^k$$$ is give, Z is unknown

- Forward algorithm answers "evaluation question"

================================================================================

Forward algorithm

- Initialize forward probability at time 1 (caculate forward probability in bottom like bottom-up dynamic programming)

$$$\alpha_1^k = b_{k,x_1} \pi_k$$$

- Use iterative fomular

- Iterate until time T to grow $$$\alpha_1$$$ to $$$\alpha_T$$$

$$$a_t^k = b_{k,x_t} \sum\limits_{i} \alpha_{t-1}^i \alpha_{i,k}$$$

- returns $$$\sum\limits_{i} \alpha_T^i$$$

- It is answer for evaluation question

================================================================================

Proof of correctness

$$$\sum\limits_{i} \alpha_T^i \\

= \sum\limits_{i} P(x_1,\cdots,x_T,z_T^i=1) \\

= P(x_1,\cdots,x_t)$$$

================================================================================

Where memorization table is used?

- When calculating forward probability $$$\alpha_t^k$$$

================================================================================

Limitation of the forward probability

Suppose your goal is to inference $$$z_2$$$ by using forward probability

inferenced probability for $$$z_2$$$ = forward probability (information=[x_1,x_2])

================================================================================

Limitation:

inferenced probability for $$$z_2$$$ is affected by also $$$x_3$$$

================================================================================

Backward probability

inferenced latent factor $$$z_2$$$ = inference latent factor.use.backward probability(information=[x_1,x_2,x_3,...])

================================================================================

Backward probability

inference latent factor z_2 = backward probability(information=whole sequence X)

================================================================================

Forward probability

inference latent factor z_2 = backward probability(information=[x_1, x_2])

================================================================================

Forward probability calculation

$$$\alpha_t^k$$$: forward probability

dimension: time $$$\times$$$ states

================================================================================

Forward algorithm

- X and $$$\alpha_t^k$$$ is give, Z is unknown

- Forward algorithm answers "evaluation question"

================================================================================

Forward algorithm

- Initialize forward probability at time 1 (caculate forward probability in bottom like bottom-up dynamic programming)

$$$\alpha_1^k = b_{k,x_1} \pi_k$$$

- Use iterative fomular

- Iterate until time T to grow $$$\alpha_1$$$ to $$$\alpha_T$$$

$$$a_t^k = b_{k,x_t} \sum\limits_{i} \alpha_{t-1}^i \alpha_{i,k}$$$

- returns $$$\sum\limits_{i} \alpha_T^i$$$

- It is answer for evaluation question

================================================================================

Proof of correctness

$$$\sum\limits_{i} \alpha_T^i \\

= \sum\limits_{i} P(x_1,\cdots,x_T,z_T^i=1) \\

= P(x_1,\cdots,x_t)$$$

================================================================================

Where memorization table is used?

- When calculating forward probability $$$\alpha_t^k$$$

================================================================================

Limitation of the forward probability

Suppose your goal is to inference $$$z_2$$$ by using forward probability

inferenced probability for $$$z_2$$$ = forward probability (information=[x_1,x_2])

================================================================================

Limitation:

inferenced probability for $$$z_2$$$ is affected by also $$$x_3$$$

================================================================================

Backward probability

inferenced latent factor $$$z_2$$$ = inference latent factor.use.backward probability(information=[x_1,x_2,x_3,...])

================================================================================

Backward probability

inference latent factor z_2 = backward probability(information=whole sequence X)

================================================================================

Forward probability

inference latent factor z_2 = backward probability(information=[x_1, x_2])