This is personal study note

Copyright and original reference:

https://www.youtube.com/watch?v=O1U2NWaSYn4&list=PLbhbGI_ppZISMV4tAWHlytBqNq1-lb8bz

================================================================================

There is no application with only forward probability and backward probability

================================================================================

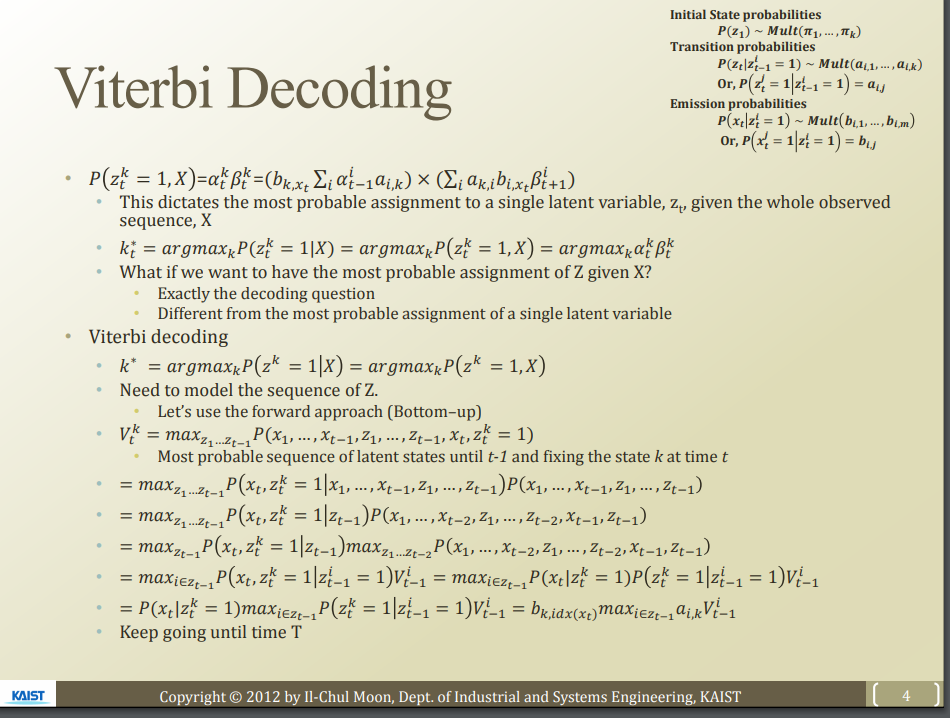

For decoding problem

- Viterbi decoding algorithm

================================================================================

================================================================================

================================================================================

Forward probability $$$\times$$$ backward probability

Forward probability $$$\times$$$ backward probability

================================================================================

Why did you create forward and backward probabilities?

For representing

================================================================================

Why did you create forward and backward probabilities?

For representing

It is joint probability between

prob of latent factor z in k-th cluster at time t and prob of X

================================================================================

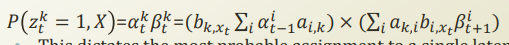

Forward and backward probabilities can be written in detail (recursive form, repeating problem)

It is joint probability between

prob of latent factor z in k-th cluster at time t and prob of X

================================================================================

Forward and backward probabilities can be written in detail (recursive form, repeating problem)

$$$\alpha_t^k$$$ reduces $$$\alpha_{t-1}^i$$$

And you use parameter a and b (emission and transition probabilities)

$$$\beta_{t+1}^i$$$: start with $$$t+1$$$, so it's also reduction in size from $$$\beta_{t}^k$$$

And you also use a and b

================================================================================

$$$\alpha_t^k$$$ reduces $$$\alpha_{t-1}^i$$$

And you use parameter a and b (emission and transition probabilities)

$$$\beta_{t+1}^i$$$: start with $$$t+1$$$, so it's also reduction in size from $$$\beta_{t}^k$$$

And you also use a and b

================================================================================

This becomes joint prob of "latent variable wrt specific time t" and X

================================================================================

Joint prob means you can use conditional prob

================================================================================

This becomes joint prob of "latent variable wrt specific time t" and X

================================================================================

Joint prob means you can use conditional prob

================================================================================

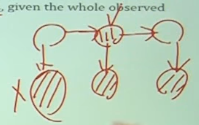

Problem:

you observe all Xs

But observations are used for one z

But you want to assign most probable assignment into all hidden labels Zs

Problem:

you observe all Xs

But observations are used for one z

But you want to assign most probable assignment into all hidden labels Zs

================================================================================

So, this is lack for above ultimate goal

================================================================================

So, this is lack for above ultimate goal

This is called "decoding question"

================================================================================

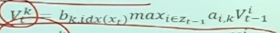

Viterbi decoding algorithm

This is called "decoding question"

================================================================================

Viterbi decoding algorithm

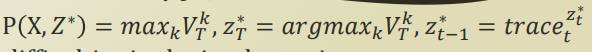

$$$k^*$$$: most probable assignment of specific cluster k

$$$P(z^k=1|X)$$$: all observations Xs are given, prob of $$$z^k$$$ ocurring

If you configure normalize term well, you can make following

$$$k^*$$$: most probable assignment of specific cluster k

$$$P(z^k=1|X)$$$: all observations Xs are given, prob of $$$z^k$$$ ocurring

If you configure normalize term well, you can make following

================================================================================

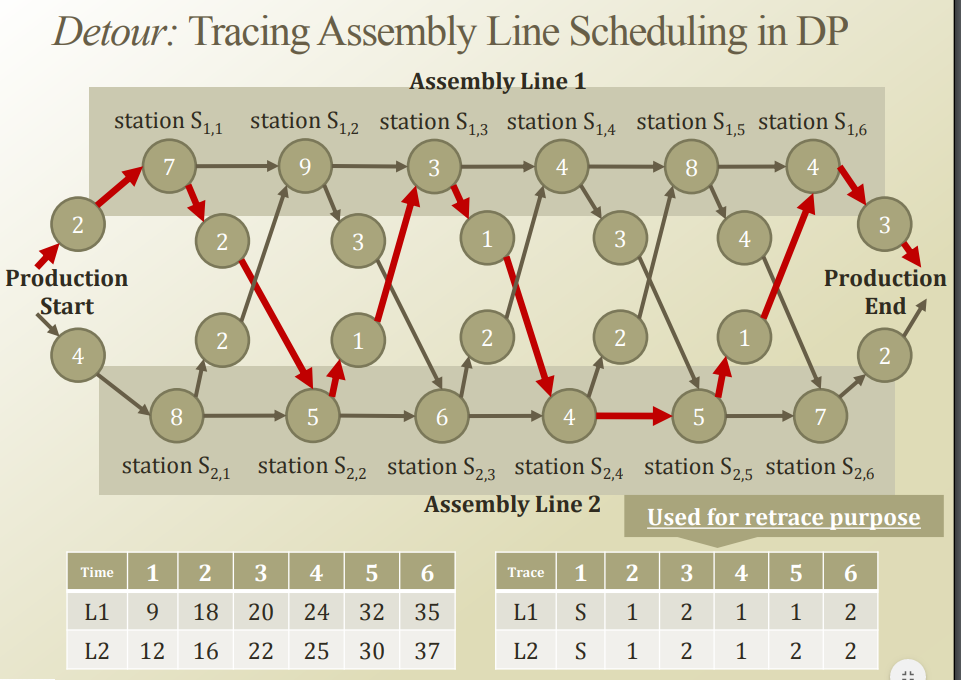

Now, you need to model entire sequence of Z

How to do it? Use dynamic programming

Fill Z values from left to right by using memorization tables

Perform retrace and get most probable likelihood Z sequence

================================================================================

Now, you need to model entire sequence of Z

How to do it? Use dynamic programming

Fill Z values from left to right by using memorization tables

Perform retrace and get most probable likelihood Z sequence

================================================================================

================================================================================

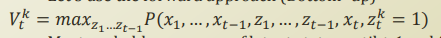

See

See

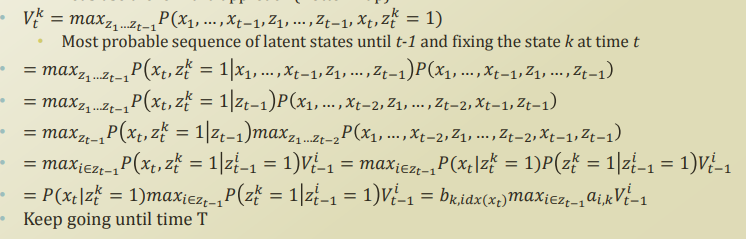

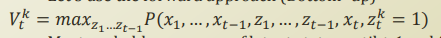

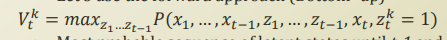

$$$x_1,\cdots,x_{t-1}$$$: all observations up to time $$$t-1$$$

$$$z_1,\cdots,z_{t-1}$$$: all latent variables up to time $$$t-1$$$

$$$x_t$$$: observation at time t

$$$z_t^k=1$$$: your goal to do, let's assign z into specific cluster k

================================================================================

$$$x_1,\cdots,x_{t-1}$$$: all observations up to time $$$t-1$$$

$$$z_1,\cdots,z_{t-1}$$$: all latent variables up to time $$$t-1$$$

$$$x_t$$$: observation at time t

$$$z_t^k=1$$$: your goal to do, let's assign z into specific cluster k

================================================================================

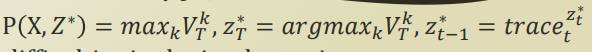

Change z values to maximize P

You get maximal prob P, and it's $$$V_t^k$$$

================================================================================

Do process

Change z values to maximize P

You get maximal prob P, and it's $$$V_t^k$$$

================================================================================

Do process

================================================================================

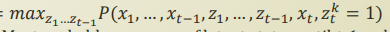

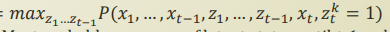

See this

================================================================================

See this

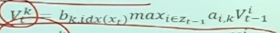

$$$V_t^k$$$ becomes "repeating problem form"

You can use dynamic programming for "repeating problem form"

================================================================================

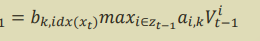

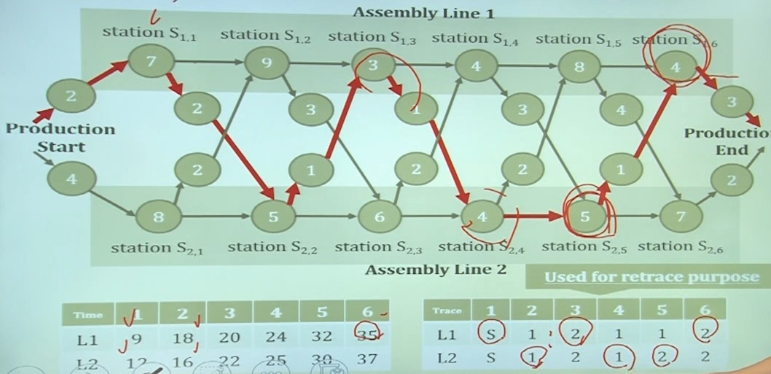

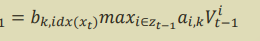

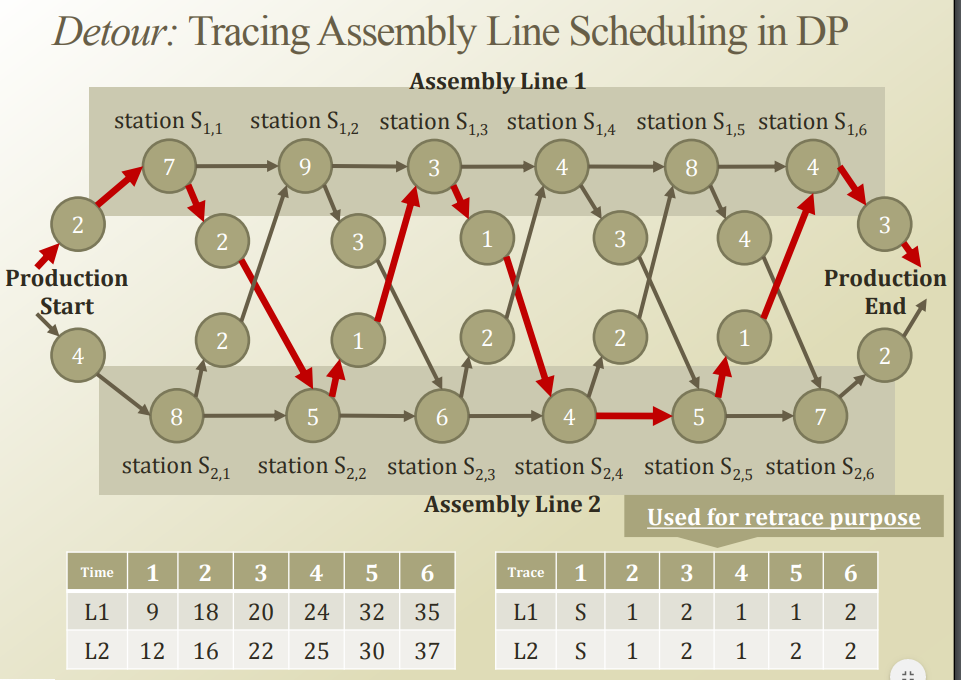

Car assembly line in the factory

$$$V_t^k$$$ becomes "repeating problem form"

You can use dynamic programming for "repeating problem form"

================================================================================

Car assembly line in the factory

left 2,4: starting point

right 3,2: ending point

Each number means took-time in minutes

When time is predicted too much, line can be transfered from A to B or B to A

================================================================================

Which routes would be the best?

left 2,4: starting point

right 3,2: ending point

Each number means took-time in minutes

When time is predicted too much, line can be transfered from A to B or B to A

================================================================================

Which routes would be the best?

2+7=9

4+8=12

================================================================================

Find optimial route

2+7=9

4+8=12

================================================================================

Find optimial route

================================================================================

Let's above process in terms of probability

You want to know $$$V_t^k$$$

$$$V_t^k$$$ is assignment by performing joint of time and states

Let's save 2 information of $$$V_t^k$$$

1. Trace information

2. probabilities information

================================================================================

Initialize Viterbi algorithm

================================================================================

Let's above process in terms of probability

You want to know $$$V_t^k$$$

$$$V_t^k$$$ is assignment by performing joint of time and states

Let's save 2 information of $$$V_t^k$$$

1. Trace information

2. probabilities information

================================================================================

Initialize Viterbi algorithm

Peform iteration to growth V

Peform iteration to growth V

You can calculate $$$V_t^k$$$ from $$$V_{t-1}^i$$$

You already know parameters a and b

================================================================================

Which assignment is the best?

Information on it is saved in trace

You can calculate $$$V_t^k$$$ from $$$V_{t-1}^i$$$

You already know parameters a and b

================================================================================

Which assignment is the best?

Information on it is saved in trace

================================================================================

You access T (entire sequence) from t

================================================================================

You access T (entire sequence) from t

================================================================================

Issue of Viterbi decoding algorith

* Frequent underflow problem

In real world, time step is large

But following a,b,V are all probabilities [0,1]

================================================================================

Issue of Viterbi decoding algorith

* Frequent underflow problem

In real world, time step is large

But following a,b,V are all probabilities [0,1]

Small values are multipled continuously

* To resolve it, calculate it in log domain

And multiplication is converted into summation

================================================================================

Summary

When $$$\pi,a,b,X$$$ are given

inference whole latent factor sequence Z? using Viterbi decoding algorithm

Small values are multipled continuously

* To resolve it, calculate it in log domain

And multiplication is converted into summation

================================================================================

Summary

When $$$\pi,a,b,X$$$ are given

inference whole latent factor sequence Z? using Viterbi decoding algorithm