Lecture notes from the following lecture:

https://www.youtube.com/watch?v=2QzwIThP8pw&list=PL9mhQYIlKEhdorgRaoASuZfgIQTakIEE9&index=4

N-to-N problem

The neural network takes N inputs and should output N predictions, one for each input.

For example, consider the sentence "Tom will go to the school on Friday".

A sentence is a sequence of tokens, so it can be treated as multiple inputs (one input per token).

The goal is to predict a tag for each token.

For example, "Tom" -> Name, "will" -> Verb, "go" -> Verb, ..., "Friday" -> Day
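A minimal Python sketch of the N-to-N setup for the example sentence: the tokens and their tags are two aligned lists of the same length. The note elides the tags of the middle tokens, so they are filled with a hypothetical placeholder "?" here rather than guessed.

```python
# The example sentence, split on whitespace into tokens (N = 8 inputs).
sentence = "Tom will go to the school on Friday"
tokens = sentence.split()

# Tags from the note; the middle tags are elided there ("..."),
# so "?" is a hypothetical placeholder, not a real tag.
tags = ["Name", "Verb", "Verb", "?", "?", "?", "?", "Day"]

# N-to-N: exactly one output tag per input token.
assert len(tokens) == len(tags)

for token, tag in zip(tokens, tags):
    print(f"{token:>8} -> {tag}")
```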

================================================================================

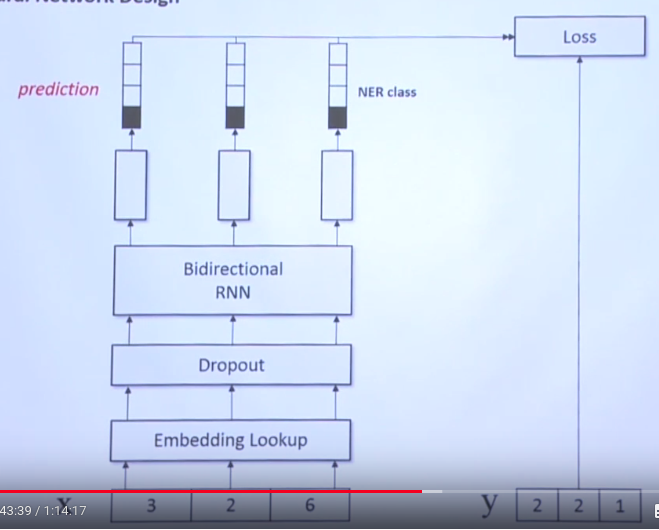

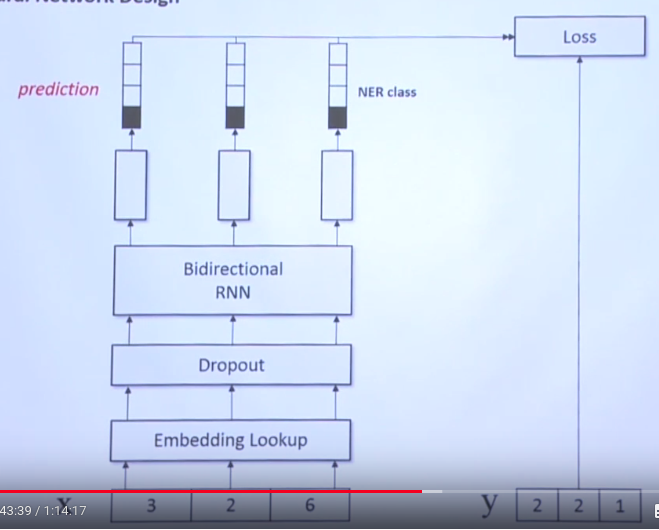

Training architecture

- Token embedding dimension: the size of the vector that encodes one token; you can choose, for example, 50 or 200 dimensions

- num_step: the number of tokens that make up one sentence

- target class: in this example, each token is assigned one of 4 classes
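The architecture above can be sketched as the forward pass of a plain (Elman) RNN in pure Python: each of the num_step token vectors updates a hidden state, and each hidden state is mapped to a probability distribution over the 4 classes. This is an illustration under assumptions, not the lecture's code: the tanh RNN cell, the hidden size, the tiny embedding size, the omitted bias terms and the random (untrained) weights are all chosen here for readability.

```python
import math
import random

# Hypothetical sizes (the note suggests e.g. 50 or 200 for the embedding
# dimension; small values keep the example readable).
embedding_dim = 5
hidden_size = 3
num_step = 8      # tokens per sentence
num_classes = 4   # target classes per token

random.seed(0)

def rand_matrix(rows, cols):
    """Small random weights; a real model would learn these."""
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    """Matrix-vector product for lists of lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def softmax(z):
    """Numerically stable softmax: scores -> probabilities summing to 1."""
    m = max(z)
    exps = [math.exp(x - m) for x in z]
    total = sum(exps)
    return [e / total for e in exps]

W_xh = rand_matrix(hidden_size, embedding_dim)  # input -> hidden
W_hh = rand_matrix(hidden_size, hidden_size)    # hidden -> hidden (recurrence)
W_hy = rand_matrix(num_classes, hidden_size)    # hidden -> class scores

def rnn_forward(inputs):
    """Vanilla RNN without biases: one class distribution per input (N in, N out)."""
    h = [0.0] * hidden_size
    outputs = []
    for x in inputs:
        pre = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, h))]
        h = [math.tanh(p) for p in pre]
        outputs.append(softmax(matvec(W_hy, h)))
    return outputs

# One sentence = num_step token vectors, each of size embedding_dim.
sentence_vectors = [[random.uniform(-1, 1) for _ in range(embedding_dim)]
                    for _ in range(num_step)]
predictions = rnn_forward(sentence_vectors)
print(len(predictions), len(predictions[0]))  # 8 4
```

The key point is the shape: num_step inputs produce num_step outputs, each a distribution over the target classes.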

================================================================================

Simplified training architecture using an embedding layer

3, 6, 2, 8, ...: token indices from the word dictionary (vocabulary)

3, 0, 0, 1, ...: class index of each token
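An embedding layer is essentially a lookup table with one trainable vector per dictionary entry. The sketch below, assuming a hypothetical 10-word dictionary and 4-dimensional embeddings, turns the token indices 3, 6, 2, 8 from the note into vectors and checks the standard equivalence between a row lookup and multiplying a one-hot vector by the table.

```python
import random

vocab_size = 10      # hypothetical dictionary size
embedding_dim = 4    # hypothetical; the note suggests e.g. 50 or 200

random.seed(0)
# Embedding table: one row (vector) per word in the dictionary.
# In a real model these rows are trainable parameters.
embedding_table = [[random.uniform(-1, 1) for _ in range(embedding_dim)]
                   for _ in range(vocab_size)]

token_ids = [3, 6, 2, 8]   # token indices shown in the note (truncated there)
class_ids = [3, 0, 0, 1]   # class index of each token, also from the note

# Embedding lookup: select the row for each token index.
token_vectors = [embedding_table[i] for i in token_ids]

def one_hot(index, size):
    """Vector of zeros with a single 1.0 at position `index`."""
    v = [0.0] * size
    v[index] = 1.0
    return v

def one_hot_times_table(index):
    """Same result computed as one_hot(index) @ embedding_table."""
    v = one_hot(index, vocab_size)
    return [sum(v[r] * embedding_table[r][c] for r in range(vocab_size))
            for c in range(embedding_dim)]

# The lookup gives the same vectors as the one-hot matrix product.
assert all(one_hot_times_table(i) == embedding_table[i] for i in token_ids)
```

This is why the simplified version can feed plain indices: the lookup replaces building one-hot vectors and multiplying them by a weight matrix.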

================================================================================

You compute one loss per time step (3 losses in the lecture's example) and average them to create one scalar loss value.
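A sketch of the averaging step, assuming per-token cross-entropy loss (the note does not name the loss function). The predicted probabilities are made up for illustration; the targets are the first three class indices from the note (3, 0, 0).

```python
import math

# Hypothetical softmax outputs for 3 time steps (4 classes each).
predictions = [
    [0.1, 0.2, 0.1, 0.6],
    [0.7, 0.1, 0.1, 0.1],
    [0.5, 0.2, 0.2, 0.1],
]
targets = [3, 0, 0]  # true class index per time step

# Cross-entropy per time step: -log(probability of the true class).
step_losses = [-math.log(p[t]) for p, t in zip(predictions, targets)]

# Average the per-step losses into one scalar loss value.
loss = sum(step_losses) / len(step_losses)
print(round(loss, 4))  # 0.5202
```

Averaging (rather than summing) keeps the loss scale independent of the sentence length.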

================================================================================