These are notes from the video lecture at

https://www.youtube.com/watch?v=ogZi5oIo4fI&list=PLlMkM4tgfjnJ3I-dbhO9JTw7gNty6o_2m&index=13&t=0s

================================================================================

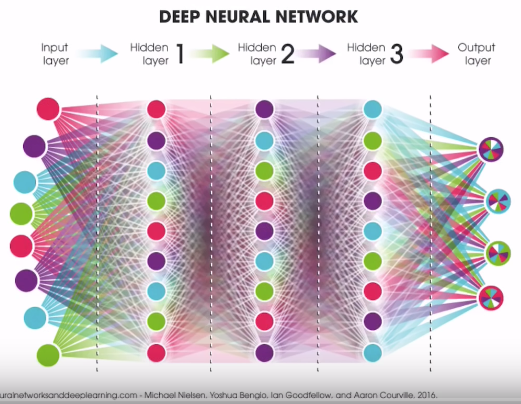

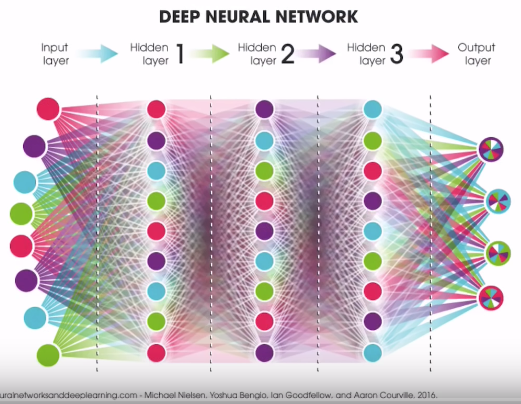

Deep Neural Network (DNN)


================================================================================

A CNN is slightly different from a DNN.

Instead of using all the data in an image at once,

a CNN focuses on local regions and extracts features from them.

Each CNN filter produces one feature map, so using many filters gives you many feature maps.
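As a rough illustration of the point about filters, the sketch below applies a convolution layer with several filters to one image and prints the resulting shape. The image size (28x28), the number of filters (8), and the kernel size (3) are assumptions chosen for the example, not values from the lecture.

```python
import torch
import torch.nn as nn

# Hypothetical sizes: one 28x28 grayscale image and 8 filters of size 3x3.
image = torch.randn(1, 1, 28, 28)  # (batch, channels, height, width)
conv = nn.Conv2d(in_channels=1, out_channels=8, kernel_size=3)

# One feature map per filter, so the channel dimension becomes 8.
feature_maps = conv(image)
print(feature_maps.shape)  # torch.Size([1, 8, 26, 26])
```

Each of the 8 output channels is one feature map; the spatial size shrinks from 28 to 26 because no padding is used here.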


================================================================================

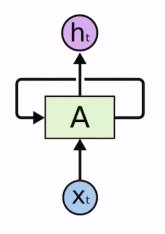

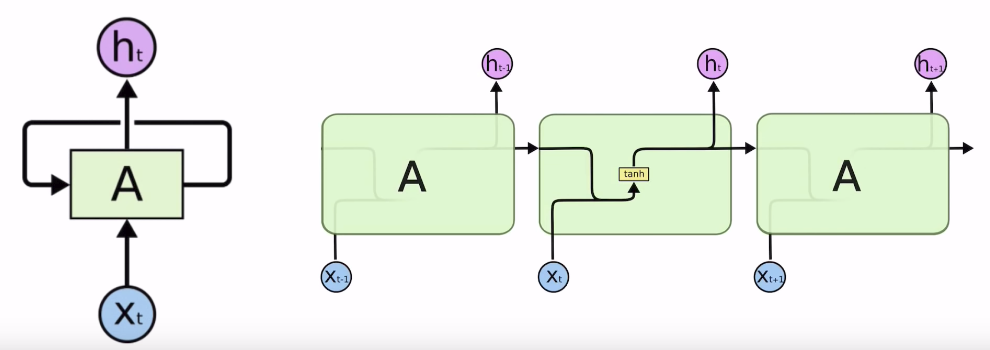

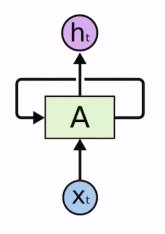

$$$X_t$$$: input data at time step $$$t$$$

$$$h_t$$$: output data at time step $$$t$$$

Arrow crossing the center: hidden state, which is passed into the next cell


================================================================================

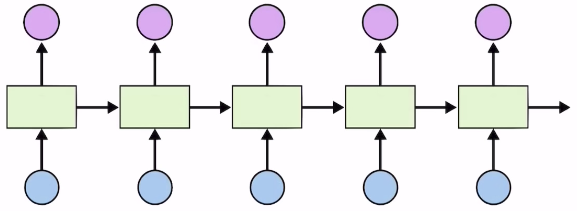

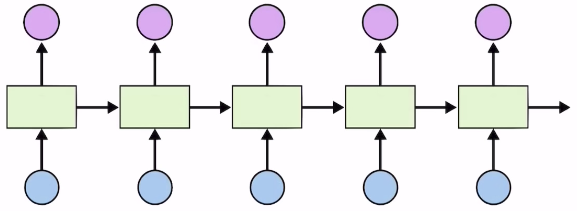

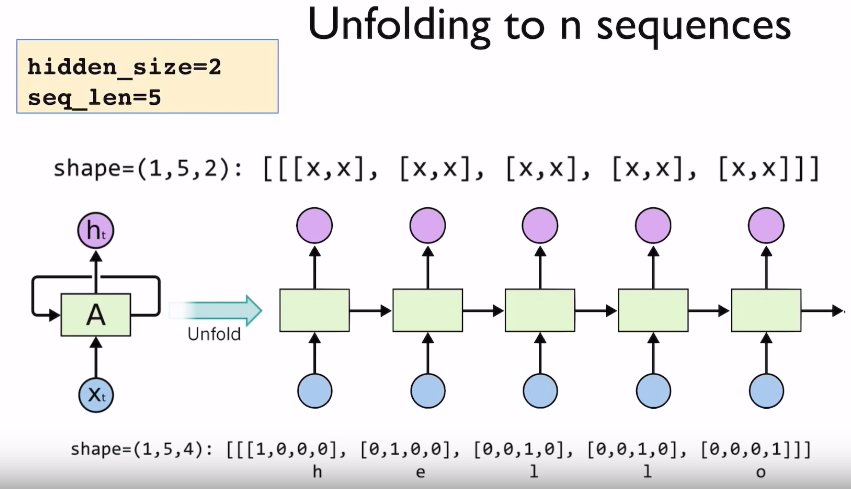

Unfolded view

The cells (green squares) take a series of inputs (blue circles) like $$$x_1,x_2,...$$$

The cells (green squares) produce a series of outputs (red circles) like $$$y_1,y_2,...$$$

The interesting thing about an RNN is that $$$y_1$$$ is passed into the next cell (next green square) as the previous state

With $$$y_1$$$ and $$$x_2$$$, the 2nd cell creates $$$y_2$$$
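The unfolded view can be sketched as an explicit loop in which each step receives the current input together with the previous output. This is a minimal sketch using `nn.RNNCell`; the sizes (3 time steps, input size 4, hidden size 2) and the zero initial state are assumptions for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes: 3 time steps, input size 4, hidden size 2.
cell = nn.RNNCell(input_size=4, hidden_size=2)
xs = torch.randn(3, 1, 4)   # x_1, x_2, x_3, each of shape (batch=1, 4)
state = torch.zeros(1, 2)   # initial state used before x_1 (assumed zeros)

ys = []
for x_t in xs:
    # The previous output is fed back in as the state for this step.
    state = cell(x_t, state)
    ys.append(state)

print(len(ys), ys[0].shape)
```

Here `ys[1]` depends on both `xs[1]` and `ys[0]`, which is the recurrence described in the notes.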

================================================================================

RNNs can be used for sequential data, for example:

- Time series prediction

- Language modeling (text generation)

- Text sentiment analysis

- Named Entity Recognition (NER)

- Translation

- Speech recognition

- Music composition

================================================================================

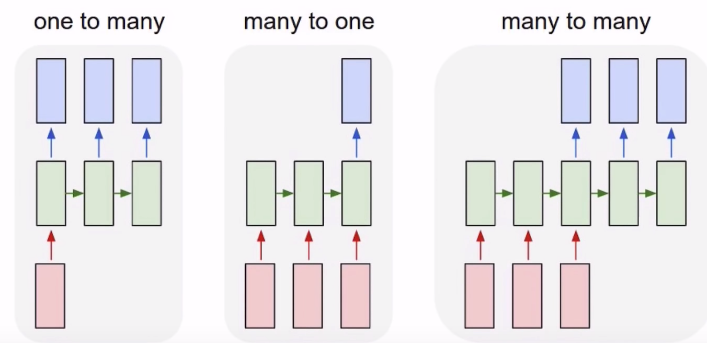


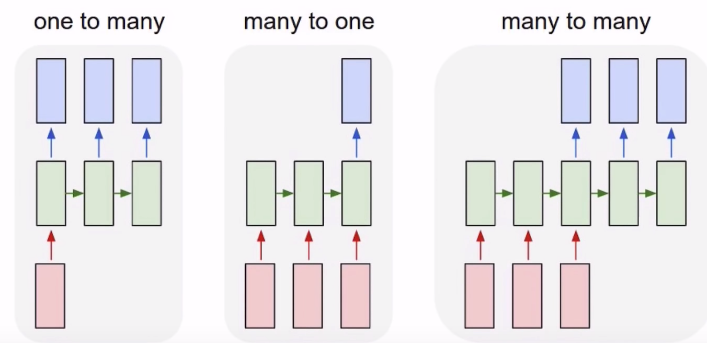

Various RNN models

- One to Many: Image captioning

Input is one image

Output is multiple words that form the caption of that image

- Many to One: Sentiment analysis

Input is a sentence composed of multiple tokens

Output is one sentiment

- Many to Many: Translation
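The difference between these configurations can be seen in which RNN outputs are kept. The sketch below is a simplified illustration, not the lecture's code: the token vectors, the sizes, and the `classifier` layer are hypothetical, and real translation models usually use a separate encoder and decoder rather than one RNN.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes: 7 tokens, each an 8-dim vector; 2 sentiment classes.
rnn = nn.RNN(input_size=8, hidden_size=16, batch_first=True)
classifier = nn.Linear(16, 2)

tokens = torch.randn(1, 7, 8)   # (batch, seq_len, input_size)
out, hidden = rnn(tokens)       # out holds one output per token

# Many to Many: keep the output of every step.
many_to_many = out
# Many to One: keep only the last step and map it to one prediction.
many_to_one = classifier(out[:, -1])

print(many_to_many.shape, many_to_one.shape)
```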

================================================================================


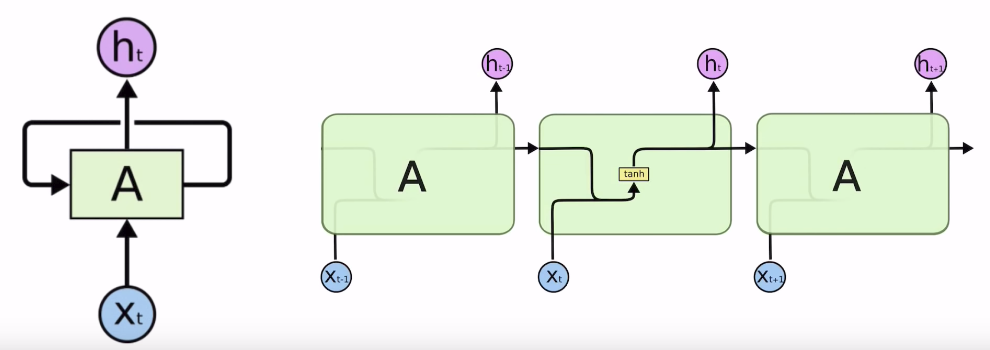

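Look inside the RNN cell

Code:

# Pseudocode for one RNN cell

# W_xh, W_hh: weight matrices for the current input and the previous hidden state

mixed=Mat_mul(W_xh,current_input)+Mat_mul(W_hh,hidden_state_from_previous_cell)

after_tanh=tanh(mixed)

# The same value is used as the output and as the previous state for the next cell

output=previous_state_for_next_cell=after_tanh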
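To connect the pseudocode to PyTorch, the sketch below recomputes one step of `nn.RNN` by hand from the layer's own parameters (`weight_ih_l0`, `weight_hh_l0`, `bias_ih_l0`, `bias_hh_l0`) and compares the result with the layer's output. The random input and hidden state are placeholders chosen for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

rnn = nn.RNN(input_size=4, hidden_size=2, batch_first=True)
x = torch.randn(1, 1, 4)       # one time step, (batch, seq_len, input_size)
h_prev = torch.randn(1, 1, 2)  # (num_layers, batch, hidden_size)

out, h_next = rnn(x, h_prev)

# Same computation written out with the layer's own parameters:
# tanh(x W_ih^T + b_ih + h_prev W_hh^T + b_hh)
manual = torch.tanh(
    x[0] @ rnn.weight_ih_l0.T + rnn.bias_ih_l0
    + h_prev[0] @ rnn.weight_hh_l0.T + rnn.bias_hh_l0
)

print(torch.allclose(out[0], manual, atol=1e-6))
```

With a single step, the output and the state passed to the next cell hold the same values, which matches the last line of the pseudocode.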

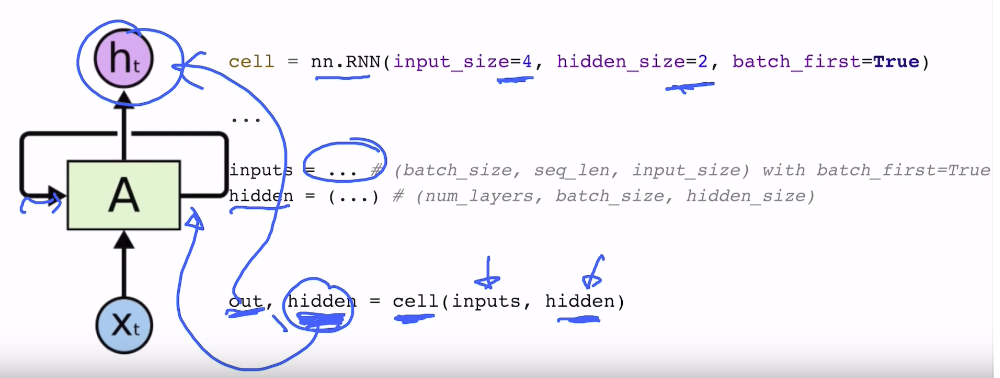

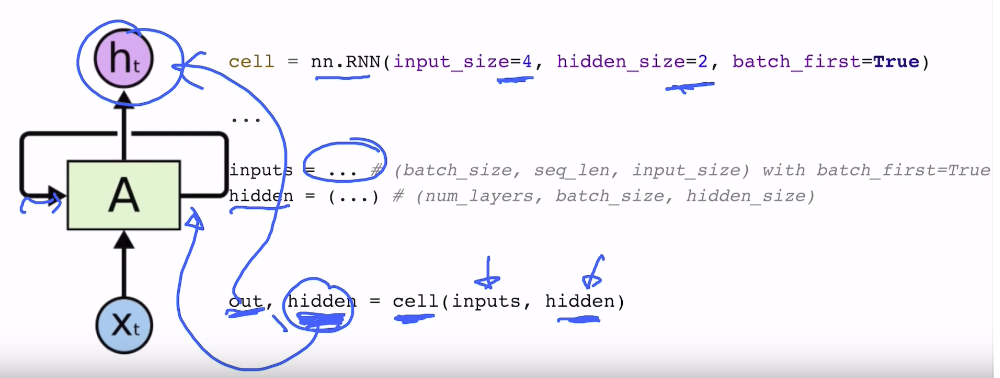

import torch

import torch.nn as nn

# c input_size: size of each input vector

# c hidden_size: size of output and previous-state-for-next-cell

# c batch_first: if True, input data has shape (batch_size,sequence_length,input_size)

# Pick one of the following cell types; they take the same arguments

cell=nn.RNN(input_size=4,hidden_size=2,batch_first=True)

cell=nn.GRU(input_size=4,hidden_size=2,batch_first=True)

cell=nn.LSTM(input_size=4,hidden_size=2,batch_first=True)
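The three layer types are constructed the same way but do not return exactly the same thing. As a small sketch (with a random placeholder input of sequence length 5), `nn.RNN` and `nn.GRU` return a single hidden state, while `nn.LSTM` returns a tuple of hidden state and cell state:

```python
import torch
import torch.nn as nn

x = torch.randn(1, 5, 4)  # placeholder input, (batch, seq_len, input_size)

rnn = nn.RNN(input_size=4, hidden_size=2, batch_first=True)
gru = nn.GRU(input_size=4, hidden_size=2, batch_first=True)
lstm = nn.LSTM(input_size=4, hidden_size=2, batch_first=True)

out_rnn, h_rnn = rnn(x)
out_gru, h_gru = gru(x)
out_lstm, (h_lstm, c_lstm) = lstm(x)  # LSTM also returns a cell state

print(out_rnn.shape, out_gru.shape, out_lstm.shape)
print(h_lstm.shape, c_lstm.shape)
```

This matters when swapping cell types: code that unpacks `out,hidden` keeps working, but for an LSTM `hidden` is then a tuple.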

Code:

cell=nn.RNN(input_size=4,hidden_size=2,batch_first=True)

# c inputs: placeholder input data of shape (batch_size,sequence_length,input_size)

inputs=torch.randn(1,1,4)

# c hidden: placeholder initial hidden state of shape (num_layers,batch_size,hidden_size)

hidden=torch.zeros(1,1,2)

# c out: h_t

# c hidden: previous state for next cell

out,hidden=cell(inputs,hidden)

# Implement next cell

# c inputs: input for this cell

# c hidden: is from previous cell

out,hidden=cell(inputs,hidden)

# c cell: one RNN cell

cell=nn.RNN(input_size=4,hidden_size=2,batch_first=True)

# ================================================================================

# c h,e,l,o: one hot vectors for the 4 letters (encoding assumed here)

h,e,l,o=[1,0,0,0],[0,1,0,0],[0,0,1,0],[0,0,0,1]

# c inputs: one letter as input, shape will be (1,1,4)

# Note that input_size=4 is the same as the 4 from (1,1,4)

inputs=torch.Tensor([[h]])

# ================================================================================

# @ You initialize hidden state manually at initial time

# c hidden: hidden_size=2 should be the same as the 2 from (1,1,2)

hidden=torch.randn(1,1,2)

# ================================================================================

# @ Feed one element to one cell

out,hidden=cell(inputs,hidden)

print(out)

# Example output (values vary because the weights and hidden state are random)

# -0.1243 0.0738

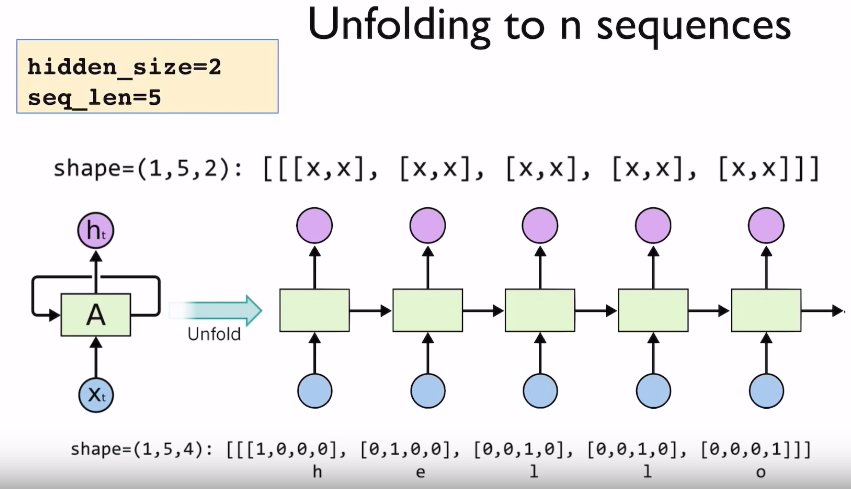

- seq_len=5 means you use 5 cells in the RNN.

- Shape of output becomes (1,5,2)

1: batch size

5: number of cells (seq_len)

2: output size

- Shape of input becomes (1,5,4)
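The shapes listed above can be checked directly. The sketch below uses a random placeholder input instead of real letters and a zero initial hidden state (both assumptions), and also shows that for a one-layer, one-direction RNN the returned hidden state equals the output of the last time step.

```python
import torch
import torch.nn as nn

cell = nn.RNN(input_size=4, hidden_size=2, batch_first=True)
inputs = torch.randn(1, 5, 4)  # (batch_size, seq_len, input_size)
hidden = torch.zeros(1, 1, 2)  # (num_layers, batch_size, hidden_size)

out, hidden = cell(inputs, hidden)
print(out.shape)     # torch.Size([1, 5, 2])
print(hidden.shape)  # torch.Size([1, 1, 2])

# The final hidden state is the output of the last of the 5 steps.
print(torch.allclose(out[:, -1], hidden[0]))
```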

================================================================================


Code:

cell=nn.RNN(input_size=4,hidden_size=2,batch_first=True)

# ================================================================================

# c h,e,l,o: one hot vectors for the 4 letters (encoding assumed here)

h,e,l,o=[1,0,0,0],[0,1,0,0],[0,0,1,0],[0,0,0,1]

inputs=torch.Tensor([[h,e,l,l,o]])

print(inputs.size())

# (1,5,4)

# ================================================================================

hidden=torch.randn(1,1,2)

# ================================================================================

out,hidden=cell(inputs,hidden)

print(out.data)

================================================================================


Code:

cell=nn.RNN(input_size=4,hidden_size=2,batch_first=True)

# ================================================================================

# c h,e,l,o: one hot vectors for the 4 letters (encoding assumed here)

h,e,l,o=[1,0,0,0],[0,1,0,0],[0,0,1,0],[0,0,0,1]

batch_input=[

[h,e,l,l,o],

[e,o,l,l,l],

[l,l,e,e,l]]

inputs=torch.Tensor(batch_input)

print(inputs.size())

# (3,5,4)

# ================================================================================

# c hidden: shape is (num_layers,batch_size,hidden_size), so batch_size=3 here

hidden=torch.randn(1,3,2)

# ================================================================================

out,hidden=cell(inputs,hidden)

print(out.data)

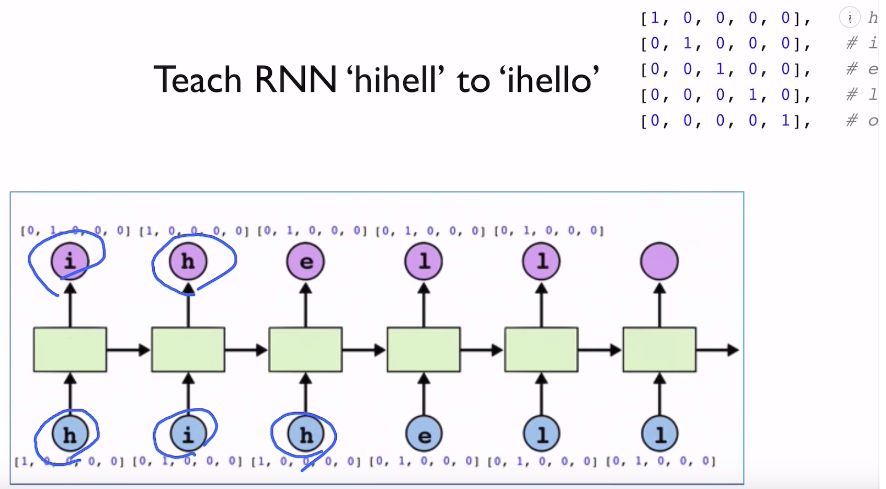

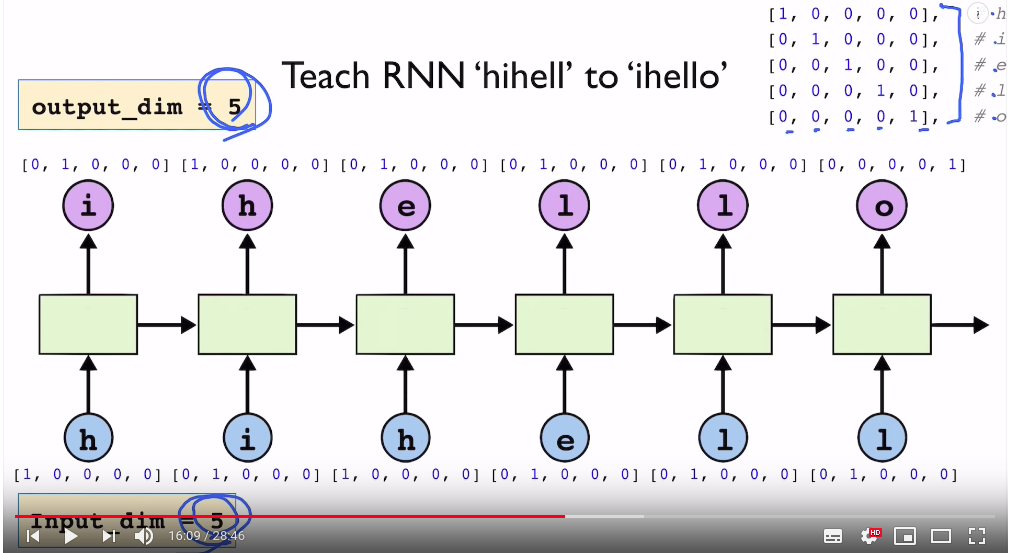

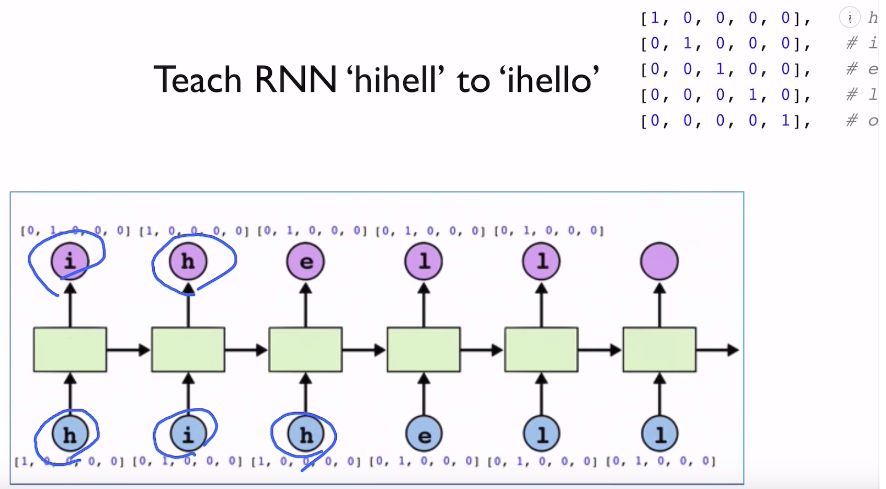

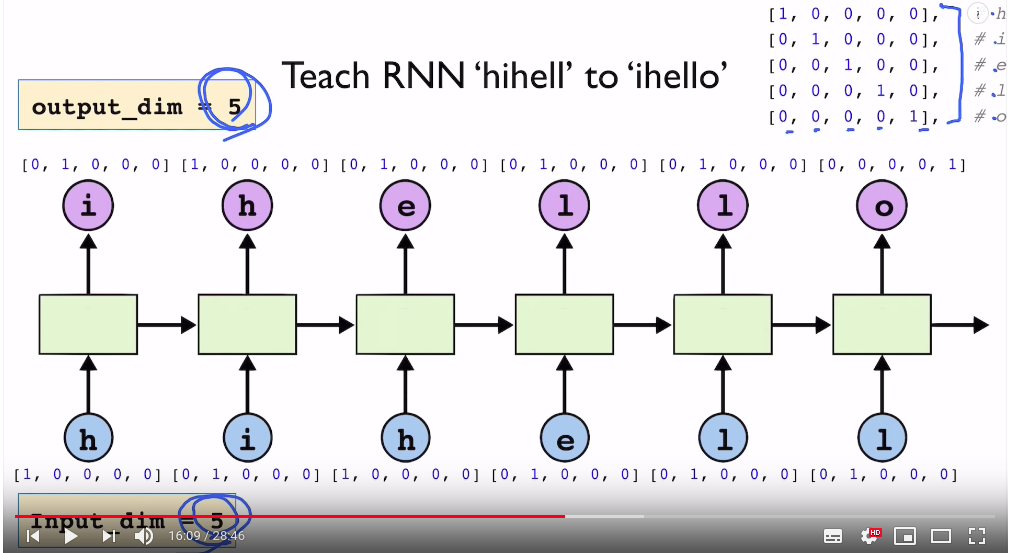

Teach the RNN model to predict "ihello" from "hihell", that is, the next character at each step

================================================================================


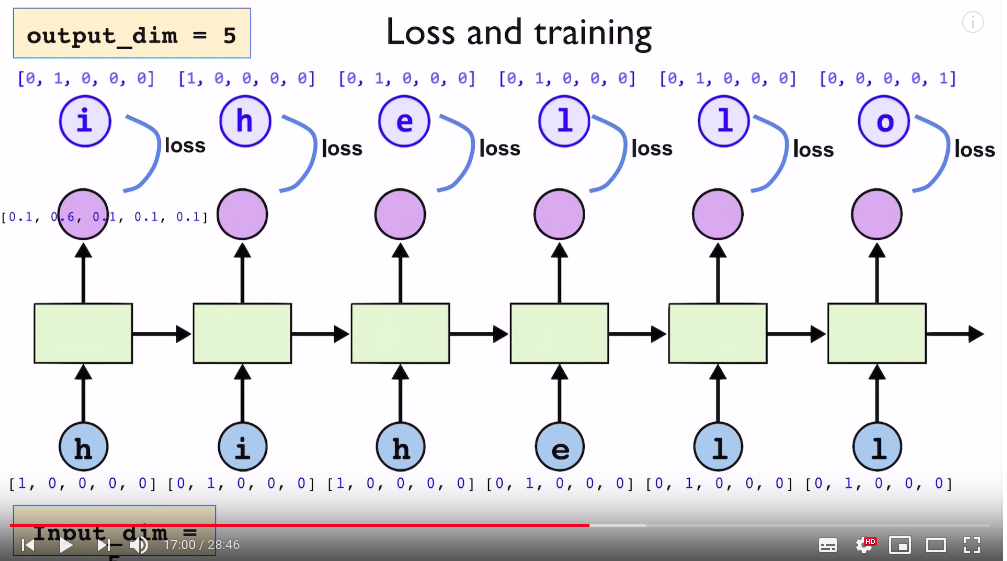

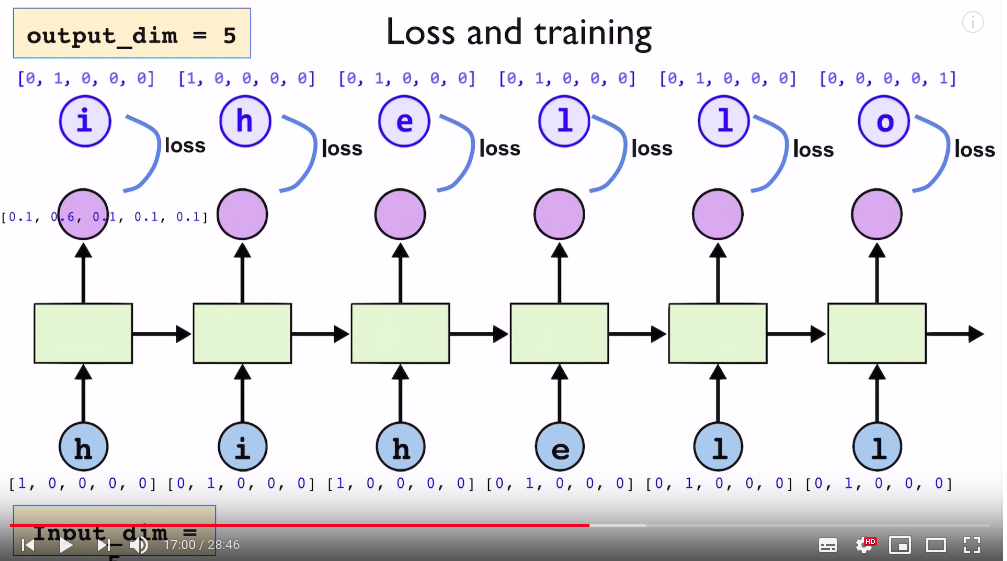

i,h,e,l,l,o are the targets $$$y$$$

Red cells are the predictions $$$\hat{y}$$$

You compare each prediction with its target using a loss function like cross entropy

Then, you sum or average the per-step losses

to create one scalar loss value
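The two ways of reducing the per-step losses can be sketched as follows. The prediction scores are random placeholders (an assumption for illustration); the targets are the class indices for "ihello" under the dictionary `['h','i','e','l','o']`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical scores: 6 time steps, 5 character classes.
y_hat = torch.randn(6, 5)             # predictions (unnormalized scores)
y = torch.tensor([1, 0, 2, 3, 3, 4])  # targets for "ihello"

# One cross entropy value per time step, shape (6,).
per_step = nn.CrossEntropyLoss(reduction="none")(y_hat, y)

loss_sum = per_step.sum()    # sum of the 6 losses
loss_mean = per_step.mean()  # average of the 6 losses

print(per_step.shape, loss_sum.item(), loss_mean.item())
```

Either scalar can be used for backpropagation; the sum is 6 times the mean here, so the choice mainly changes the effective learning rate.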

================================================================================


Example

Code:

import torch

import torch.nn as nn

idx2char=['h','i','e','l','o']

# ================================================================================

# @ Teach RNN to know the context of "hihell->ihello"

# c x_data: represents hihell

# c x_data: indices of each character from character dictionary idx2char

x_data=[0,1,0,2,3,3]

one_hot_lookup=[

[1,0,0,0,0],

[0,1,0,0,0],

[0,0,1,0,0],

[0,0,0,1,0],

[0,0,0,0,1]]

# c y_data: represents ihello

y_data=[1,0,2,3,3,4]

# c x_one_hot: convert x_data into one hot representation

x_one_hot=[one_hot_lookup[x] for x in x_data]

# ================================================================================

inputs=torch.Tensor(x_one_hot)

# c labels: class indices, which must be integer (long) type for cross entropy

labels=torch.LongTensor(y_data)

# ================================================================================

# @ Parameters

num_classes=5

input_size=5 # dimension of the one hot representation which encodes one character

hidden_size=5 # output size of the RNN, equal to num_classes to predict one character directly

batch_size=1 # one sentence

sequence_length=1 # perform tasks one by one, not using series of cells

num_layers=1 # one-layer RNN

# ================================================================================

# @ Model

class Model(nn.Module):

    def __init__(self):

        super(Model,self).__init__()

        self.rnn=nn.RNN(input_size=input_size,hidden_size=hidden_size,batch_first=True)

    def forward(self,x,hidden):

        # Reshape input to (batch_size,sequence_length,input_size)

        x=x.view(batch_size,sequence_length,input_size)

        # --------------------------------------------------------------------------------

        # Propagate input through RNN

        out,hidden=self.rnn(x,hidden)

        # Flatten output to (batch_size*sequence_length,num_classes)

        out=out.view(-1,num_classes)

        return hidden,out

    def init_hidden(self):

        # Initialize hidden state

        # (num_layers*num_directions,batch,hidden_size)

        return torch.zeros(num_layers,batch_size,hidden_size)
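The notes stop at the model definition. A training loop for this model could look roughly like the sketch below, which feeds one character at a time, sums the cross entropy losses over the 6 steps, and updates the weights. The optimizer (Adam), learning rate (0.1), number of epochs (100), and the `torch.eye` shortcut for one hot encoding are assumptions for illustration, not settings confirmed by the notes.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

idx2char = ['h', 'i', 'e', 'l', 'o']
x_data = [0, 1, 0, 2, 3, 3]    # hihell
y_data = [1, 0, 2, 3, 3, 4]    # ihello
inputs = torch.eye(5)[x_data]  # one hot rows, shape (6, 5)
labels = torch.tensor(y_data)  # class indices, shape (6,)


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.rnn = nn.RNN(input_size=5, hidden_size=5, batch_first=True)

    def forward(self, x, hidden):
        x = x.view(1, 1, 5)          # (batch_size, sequence_length, input_size)
        out, hidden = self.rnn(x, hidden)
        return hidden, out.view(-1, 5)

    def init_hidden(self):
        return torch.zeros(1, 1, 5)  # (num_layers, batch_size, hidden_size)


model = Model()
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.1)  # assumed settings

losses = []
for epoch in range(100):
    optimizer.zero_grad()
    hidden = model.init_hidden()
    loss = 0
    predicted = []
    # Feed "hihell" one character at a time, carrying the hidden state along.
    for x, y in zip(inputs, labels):
        hidden, output = model(x, hidden)
        loss = loss + criterion(output, y.unsqueeze(0))  # sum of per-step losses
        predicted.append(idx2char[output.argmax(dim=1).item()])
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(''.join(predicted), losses[0], losses[-1])
```

The printed string is the model's prediction in the last epoch; with enough training it would be expected to approach "ihello", though the exact result depends on the random initialization.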